人工智能太疯狂,传统劳动力和内容创作平台被AI枪毙,弃尸尘埃。并非空穴来风,也不是危言耸听,人工智能AI图像增强框架ControlNet正在疯狂地改写绘画艺术的发展进程,你问我绘画行业未来的样子?我只好指着ControlNet的方向。本次我们在M1/M2芯片的Mac系统下,体验人工智能登峰造极的绘画艺术。

ControlNet在HuggingFace训练平台上也有体验版,请参见: https://huggingface.co/spaces/hysts/ControlNet

但由于公共平台算力有限,同时输入参数也受到平台的限制,一次只能训练一张图片,不能让人开怀畅饮。

为了能和史上最伟大的图像增强框架ControlNet一亲芳泽,我们选择本地搭建ControlNet环境,首先运行Git命令拉取官方的线上代码:

git clone https://github.com/lllyasviel/ControlNet.git

复制拉取成功后,进入项目目录:

cd ControlNet

复制由于Github对文件大小有限制,所以ControlNet的训练模型只能单独下载,模型都放在HuggingFace平台上:https://huggingface.co/lllyasviel/ControlNet/tree/main/models,需要注意的是,每个模型的体积都非常巨大,达到了5.71G,令人乍舌。

下载好模型后,需要将其放到ControlNet的models目录中:

├── models │ ├── cldm_v15.yaml │ ├── cldm_v21.yaml │ └── control_sd15_canny.pth复制

这里笔者下载了control_sd15_canny.pth模型,即放入models目录中,其他模型也是一样。

随后安装运行环境,官方推荐使用conda虚拟环境,安装好conda后,运行命令激活虚拟环境即可:

conda env create -f environment.yaml

conda activate control

复制但笔者查看了官方的environment.yaml配置文件:

name: control

channels:

- pytorch

- defaults

dependencies:

- python=3.8.5

- pip=20.3

- cudatoolkit=11.3

- pytorch=1.12.1

- torchvision=0.13.1

- numpy=1.23.1

- pip:

- gradio==3.16.2

- albumentations==1.3.0

- opencv-contrib-python==4.3.0.36

- imageio==2.9.0

- imageio-ffmpeg==0.4.2

- pytorch-lightning==1.5.0

- omegaconf==2.1.1

- test-tube>=0.7.5

- streamlit==1.12.1

- einops==0.3.0

- transformers==4.19.2

- webdataset==0.2.5

- kornia==0.6

- open_clip_torch==2.0.2

- invisible-watermark>=0.1.5

- streamlit-drawable-canvas==0.8.0

- torchmetrics==0.6.0

- timm==0.6.12

- addict==2.4.0

- yapf==0.32.0

- prettytable==3.6.0

- safetensors==0.2.7

- basicsr==1.4.2

复制一望而知,Python版本是老旧的3.8,Torch版本1.12并不支持Mac独有的Mps训练模式。

同时,Conda环境也有一些缺点:

环境隔离可能会导致一些问题。虽然虚拟环境允许您管理软件包的版本和依赖关系,但有时也可能导致环境冲突和奇怪的错误。

Conda环境可以占用大量磁盘空间。每个环境都需要独立的软件包副本和依赖项。如果需要创建多个环境,这可能会导致磁盘空间不足的问题。

软件包可用性和兼容性也可能是一个问题。Conda环境可能不包含某些软件包或库,或者可能不支持特定操作系统或硬件架构。

在某些情况下,Conda环境的创建和管理可能会变得复杂和耗时。如果需要管理多个环境,并且需要在这些环境之间频繁切换,这可能会变得困难。

所以我们也可以用最新版的Python3.10来构建ControlNet训练环境,编写requirements.txt文件:

pytorch==1.13.0 gradio==3.16.2 albumentations==1.3.0 opencv-contrib-python==4.3.0.36 imageio==2.9.0 imageio-ffmpeg==0.4.2 pytorch-lightning==1.5.0 omegaconf==2.1.1 test-tube>=0.7.5 streamlit==1.12.1 einops==0.3.0 transformers==4.19.2 webdataset==0.2.5 kornia==0.6 open_clip_torch==2.0.2 invisible-watermark>=0.1.5 streamlit-drawable-canvas==0.8.0 torchmetrics==0.6.0 timm==0.6.12 addict==2.4.0 yapf==0.32.0 prettytable==3.6.0 safetensors==0.2.7 basicsr==1.4.2复制

随后,运行命令:

pip3 install -r requirements.txt复制

至此,基于Python3.10来构建ControlNet训练环境就完成了,关于Python3.10的安装,请移玉步至:一网成擒全端涵盖,在不同架构(Intel x86/Apple m1 silicon)不同开发平台(Win10/Win11/Mac/Ubuntu)上安装配置Python3.10开发环境,这里不再赘述。

ControlNet的代码中将训练模式写死为Cuda,CUDA是NVIDIA开发的一个并行计算平台和编程模型,因此不支持NVIDIA GPU的系统将无法运行CUDA训练模式。

除此之外,其他不支持CUDA训练模式的系统可能包括:

没有安装NVIDIA GPU驱动程序的系统

没有安装CUDA工具包的系统

使用的NVIDIA GPU不支持CUDA(较旧的GPU型号可能不支持CUDA)

没有足够的GPU显存来运行CUDA训练模式(尤其是在训练大型深度神经网络时需要大量显存)

需要注意的是,即使系统支持CUDA,也需要确保所使用的机器学习框架支持CUDA,否则无法使用CUDA进行训练。

我们可以修改代码将训练模式改为Mac支持的Mps,请参见:闻其声而知雅意,M1 Mac基于PyTorch(mps/cpu/cuda)的人工智能AI本地语音识别库Whisper(Python3.10),这里不再赘述。

如果代码运行过程中,报下面的错误:

RuntimeError: Attempting to deserialize object on a CUDA device but torch.cuda.is_available() is False. If you are running on a CPU-only machine, please use torch.load with map_location=torch.device('cpu') to map your storages to the CPU.

复制说明当前系统不支持cuda模型,需要修改几个地方,以项目中的gradio_canny2image.py为例子,需要将gradio_canny2image.py文件中的cuda替换为cpu,同时修改/ControlNet/ldm/modules/encoders/modules.py文件,将cuda替换为cpu,修改/ControlNet/cldm/ddim_hacked.py文件,将cuda替换为cpu。至此,训练模式就改成cpu了。

修改完代码后,直接在终端运行gradio_canny2image.py文件:

python3 gradio_canny2image.py复制

程序返回:

➜ ControlNet git:(main) ✗ /opt/homebrew/bin/python3.10 "/Users/liuyue/wodfan/work/ControlNet/gradio_cann

y2image.py"

logging improved.

No module 'xformers'. Proceeding without it.

/opt/homebrew/lib/python3.10/site-packages/pytorch_lightning/utilities/distributed.py:258: LightningDeprecationWarning: `pytorch_lightning.utilities.distributed.rank_zero_only` has been deprecated in v1.8.1 and will be removed in v2.0.0. You can import it from `pytorch_lightning.utilities` instead.

rank_zero_deprecation(

ControlLDM: Running in eps-prediction mode

DiffusionWrapper has 859.52 M params.

making attention of type 'vanilla' with 512 in_channels

Working with z of shape (1, 4, 32, 32) = 4096 dimensions.

making attention of type 'vanilla' with 512 in_channels

Loaded model config from [./models/cldm_v15.yaml]

Loaded state_dict from [./models/control_sd15_canny.pth]

Running on local URL: http://0.0.0.0:7860

To create a public link, set `share=True` in `launch()`.

复制此时,在本地系统的7860端口上会运行ControlNet的Web客户端服务。

访问 http://localhost:7860,就可以直接上传图片进行训练了。

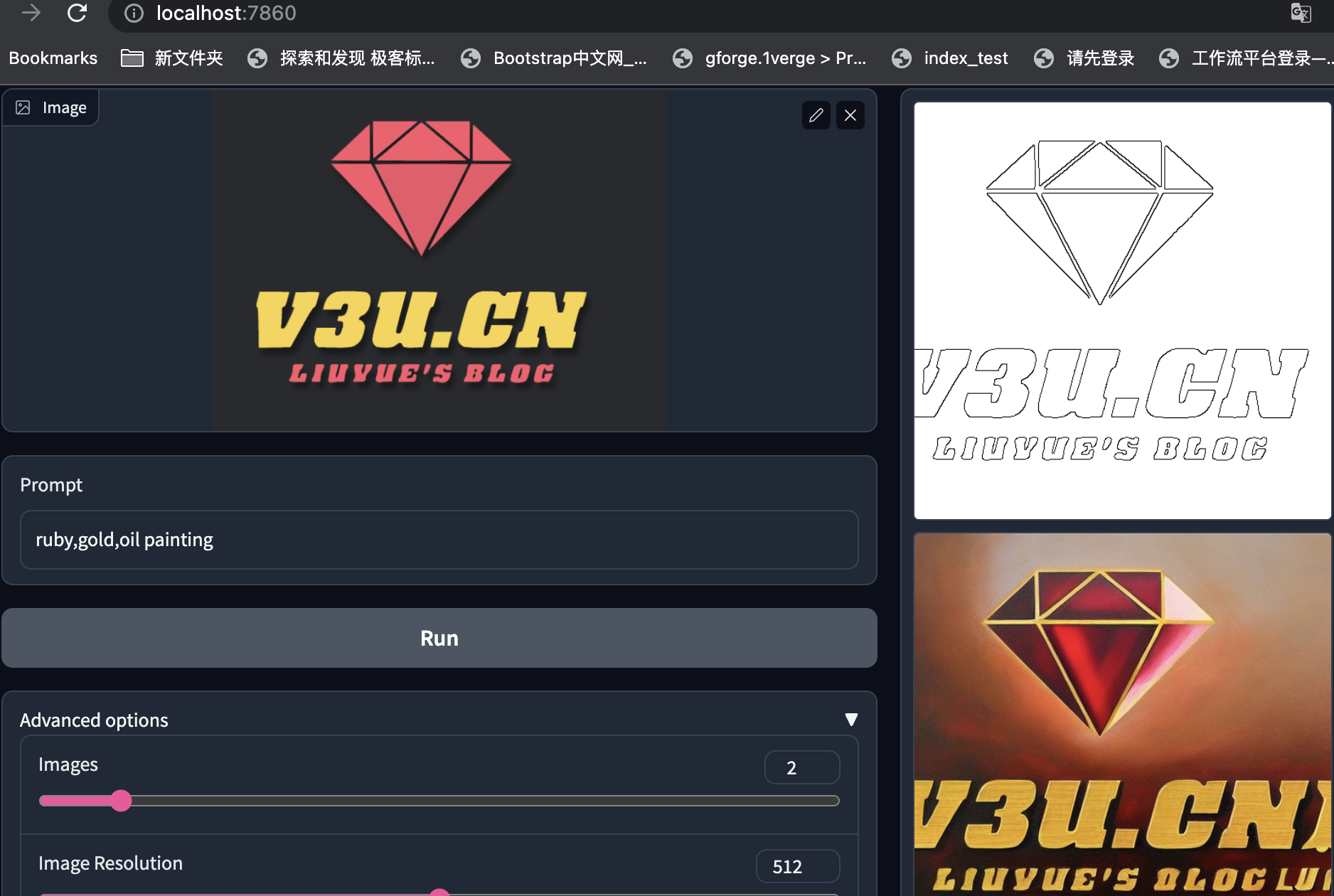

这里以本站的Logo图片为例子:

通过输入引导词和其他训练参数,就可以对现有图片进行扩散模型的增强处理,这里的引导词的意思是:红宝石、黄金、油画。训练结果可谓是言有尽而意无穷了。

除了主引导词,系统默认会添加一些辅助引导词,比如要求图像品质的best quality, extremely detailed等等,完整代码:

from share import *

import config

import cv2

import einops

import gradio as gr

import numpy as np

import torch

import random

from pytorch_lightning import seed_everything

from annotator.util import resize_image, HWC3

from annotator.canny import CannyDetector

from cldm.model import create_model, load_state_dict

from cldm.ddim_hacked import DDIMSampler

apply_canny = CannyDetector()

model = create_model('./models/cldm_v15.yaml').cpu()

model.load_state_dict(load_state_dict('./models/control_sd15_canny.pth', location='cpu'))

model = model.cpu()

ddim_sampler = DDIMSampler(model)

def process(input_image, prompt, a_prompt, n_prompt, num_samples, image_resolution, ddim_steps, guess_mode, strength, scale, seed, eta, low_threshold, high_threshold):

with torch.no_grad():

img = resize_image(HWC3(input_image), image_resolution)

H, W, C = img.shape

detected_map = apply_canny(img, low_threshold, high_threshold)

detected_map = HWC3(detected_map)

control = torch.from_numpy(detected_map.copy()).float().cpu() / 255.0

control = torch.stack([control for _ in range(num_samples)], dim=0)

control = einops.rearrange(control, 'b h w c -> b c h w').clone()

if seed == -1:

seed = random.randint(0, 65535)

seed_everything(seed)

if config.save_memory:

model.low_vram_shift(is_diffusing=False)

cond = {"c_concat": [control], "c_crossattn": [model.get_learned_conditioning([prompt + ', ' + a_prompt] * num_samples)]}

un_cond = {"c_concat": None if guess_mode else [control], "c_crossattn": [model.get_learned_conditioning([n_prompt] * num_samples)]}

shape = (4, H // 8, W // 8)

if config.save_memory:

model.low_vram_shift(is_diffusing=True)

model.control_scales = [strength * (0.825 ** float(12 - i)) for i in range(13)] if guess_mode else ([strength] * 13) # Magic number. IDK why. Perhaps because 0.825**12<0.01 but 0.826**12>0.01

samples, intermediates = ddim_sampler.sample(ddim_steps, num_samples,

shape, cond, verbose=False, eta=eta,

unconditional_guidance_scale=scale,

unconditional_conditioning=un_cond)

if config.save_memory:

model.low_vram_shift(is_diffusing=False)

x_samples = model.decode_first_stage(samples)

x_samples = (einops.rearrange(x_samples, 'b c h w -> b h w c') * 127.5 + 127.5).cpu().numpy().clip(0, 255).astype(np.uint8)

results = [x_samples[i] for i in range(num_samples)]

return [255 - detected_map] + results

block = gr.Blocks().queue()

with block:

with gr.Row():

gr.Markdown("## Control Stable Diffusion with Canny Edge Maps")

with gr.Row():

with gr.Column():

input_image = gr.Image(source='upload', type="numpy")

prompt = gr.Textbox(label="Prompt")

run_button = gr.Button(label="Run")

with gr.Accordion("Advanced options", open=False):

num_samples = gr.Slider(label="Images", minimum=1, maximum=12, value=1, step=1)

image_resolution = gr.Slider(label="Image Resolution", minimum=256, maximum=768, value=512, step=64)

strength = gr.Slider(label="Control Strength", minimum=0.0, maximum=2.0, value=1.0, step=0.01)

guess_mode = gr.Checkbox(label='Guess Mode', value=False)

low_threshold = gr.Slider(label="Canny low threshold", minimum=1, maximum=255, value=100, step=1)

high_threshold = gr.Slider(label="Canny high threshold", minimum=1, maximum=255, value=200, step=1)

ddim_steps = gr.Slider(label="Steps", minimum=1, maximum=100, value=20, step=1)

scale = gr.Slider(label="Guidance Scale", minimum=0.1, maximum=30.0, value=9.0, step=0.1)

seed = gr.Slider(label="Seed", minimum=-1, maximum=2147483647, step=1, randomize=True)

eta = gr.Number(label="eta (DDIM)", value=0.0)

a_prompt = gr.Textbox(label="Added Prompt", value='best quality, extremely detailed')

n_prompt = gr.Textbox(label="Negative Prompt",

value='longbody, lowres, bad anatomy, bad hands, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality')

with gr.Column():

result_gallery = gr.Gallery(label='Output', show_label=False, elem_id="gallery").style(grid=2, height='auto')

ips = [input_image, prompt, a_prompt, n_prompt, num_samples, image_resolution, ddim_steps, guess_mode, strength, scale, seed, eta, low_threshold, high_threshold]

run_button.click(fn=process, inputs=ips, outputs=[result_gallery])

block.launch(server_name='0.0.0.0')

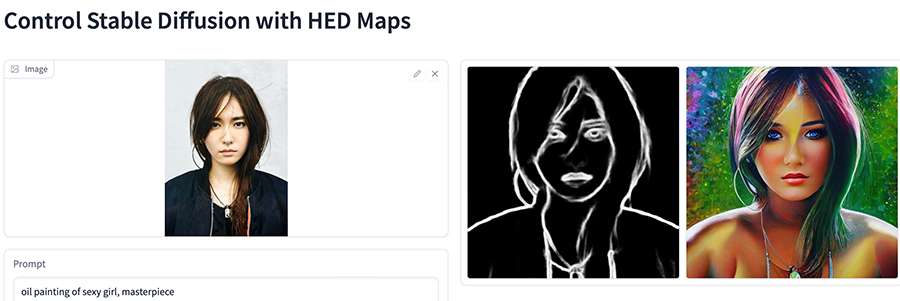

复制其他的模型,比如gradio_hed2image.py,它可以保留输入图像中的许多细节,适合图像的重新着色和样式化的场景:

还记得AnimeGANv2模型吗:神工鬼斧惟肖惟妙,M1 mac系统深度学习框架Pytorch的二次元动漫动画风格迁移滤镜AnimeGANv2+Ffmpeg(图片+视频)快速实践,之前还只能通过统一模型滤镜进行转化,现在只要修改引导词,我们就可以肆意地变化出不同的滤镜,人工智能技术的发展,就像发情的海,汹涌澎湃。

“人类嘛时候会被人工智能替代呀?”

“就是现在!就在今天!”

就算是达芬奇还魂,齐白石再生,他们也会被现今的人工智能AI技术所震撼,纵横恣肆的笔墨,抑扬变化的形态,左右跌宕的心气,焕然飞动的神采!历史长河中这一刻,大千世界里这一处,让我们变得疯狂!

最后奉上修改后的基于Python3.10的Cpu训练版本的ControlNet,与众亲同飨:https://github.com/zcxey2911/ControlNet_py3.10_cpu_NoConda