Overlay IPAM: specifying the subnet range

Specify the IP address space:

docker network create -d overlay --subnet 10.22.1.0/24 ov_net3
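Later in this walkthrough, Docker's IPAM picks 10.0.0.0/24 for the overlay network on its own. That is the same range as the hosts' ens33 LAN (10.0.0.135/24 in the `ip a` output). Choosing the subnet yourself avoids this overlap. Below is a minimal bash sketch of a pre-flight check that tests two CIDR blocks for overlap before one of them is passed to `--subnet`. The function names are my own and are not part of Docker. The sketch assumes the input is in `A.B.C.D/LEN` form.

```bash
#!/usr/bin/env bash
# Sketch: check whether two IPv4 CIDR blocks overlap before handing one to
# `docker network create --subnet`. Pure bash arithmetic (64-bit integers).

# ip_to_int A.B.C.D -> the address as a 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_range A.B.C.D/LEN -> "FIRST LAST" (integers) covered by the block
cidr_range() {
  local base len mask first
  base=$(ip_to_int "${1%/*}")
  len=${1#*/}
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  first=$(( base & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
}

# cidr_overlap CIDR1 CIDR2 -> exit status 0 if the blocks share any address
cidr_overlap() {
  local a1 a2 b1 b2
  read -r a1 a2 <<< "$(cidr_range "$1")"
  read -r b1 b2 <<< "$(cidr_range "$2")"
  (( a1 <= b2 && b1 <= a2 ))
}
```

Usage, assuming the hosts' LAN is 10.0.0.0/24: `if cidr_overlap 10.22.1.0/24 10.0.0.0/24; then echo "overlaps the LAN" >&2; else docker network create -d overlay --subnet 10.22.1.0/24 ov_net3; fi`.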

https://www.cnblogs.com/machangwei-8/p/15757120.html

Prerequisite: Docker is already installed and running on every host.

Planned deployment:

10.0.0.134 Consul server, hostname mcw4

10.0.0.135 host1, hostname mcw5

10.0.0.136 host2, hostname mcw6

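host1 and host2 use Consul, a key-value store, to save network state for cross-host container communication: networks, endpoints, IP addresses, and so on. Etcd or ZooKeeper can be used instead of Consul.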

[root@mcw4 ~]$ docker run -d -p 8500:8500 -h consul --name consul progrium/consul -server -bootstrap

Unable to find image 'progrium/consul:latest' locally

latest: Pulling from progrium/consul

Image docker.io/progrium/consul:latest uses outdated schema1 manifest format. Please upgrade to a schema2 image for better future compatibility. More information at https://docs.docker.com/registry/spec/deprecated-schema-v1/

c862d82a67a2: Already exists

0e7f3c08384e: Already exists

0e221e32327a: Already exists

09a952464e47: Already exists

60a1b927414d: Already exists

4c9f46b5ccce: Already exists

417d86672aa4: Already exists

b0d47ad24447: Pull complete

fd5300bd53f0: Pull complete

a3ed95caeb02: Pull complete

d023b445076e: Pull complete

ba8851f89e33: Pull complete

5d1cefca2a28: Pull complete

Digest: sha256:8cc8023462905929df9a79ff67ee435a36848ce7a10f18d6d0faba9306b97274

Status: Downloaded newer image for progrium/consul:latest

3fc6e630abc28f4add16eb8448fa069dfd52eab0c2b1b6a4f0f5cbb6311f4f9f

[root@mcw4 ~]$ cat /etc/docker/daemon.json # pulls kept hanging, so I added the last two registry mirrors; after restarting Docker the pull finished quickly

{"registry-mirrors":["https://reg-mirror.qiniu.com/","https://docker.mirrors.ustc.edu.cn/","https://hub-mirror.c.163.com/"]}
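The mirror list above is plain JSON. The hypothetical bash helper below renders it from a list of URLs, so the file can be regenerated instead of edited by hand. It performs no JSON escaping, so it assumes the URLs contain no quotes or backslashes. It writes only the `registry-mirrors` key, which means redirecting its output over an existing daemon.json would drop any other settings in that file.

```bash
#!/usr/bin/env bash
# Sketch: print a daemon.json containing only the "registry-mirrors" key.
# Assumes the URLs need no JSON escaping (no quotes or backslashes).
render_daemon_json() {
  local out='{"registry-mirrors":[' sep='' url
  for url in "$@"; do
    out+="${sep}\"${url}\""   # append "url", comma-separated after the first
    sep=','
  done
  printf '%s]}\n' "$out"
}
```

Usage: `render_daemon_json https://reg-mirror.qiniu.com/ https://docker.mirrors.ustc.edu.cn/ https://hub-mirror.c.163.com/ > /etc/docker/daemon.json && systemctl restart docker`. This reproduces the file shown above.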

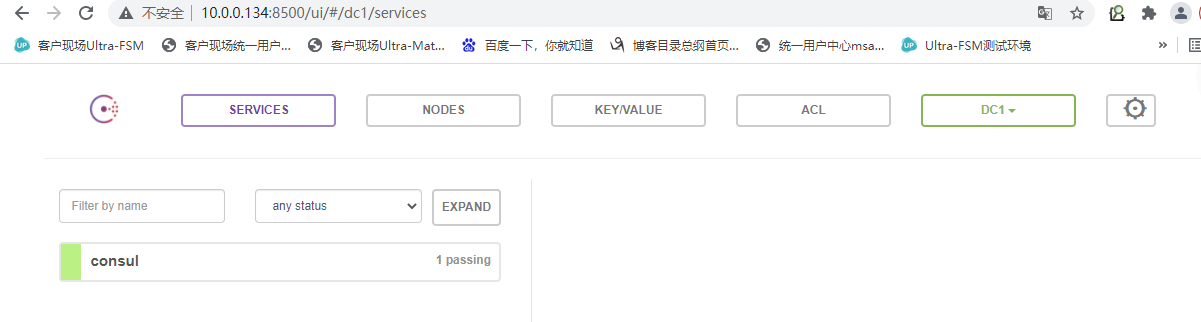

Open the URL: http://10.0.0.134:8500/

In the end, the configuration came out as follows:

Container hosts (host1/host2): ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock -H tcp://0.0.0.0:2376 --cluster-store=consul://10.0.0.134:8500 --cluster-advertise=ens33:2376

Consul server host: ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0 -H fd:// --containerd=/run/containerd/containerd.sock

However, the Consul server host was also turned into a swarm manager, and I'm not sure that is actually required. Details:

vim /usr/lib/systemd/system/docker.service

Original:

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Changed to:

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock -H tcp://0.0.0.0:2376 --cluser-store=consul://10.0.0.134:8500 --cluster-advertise=ens33:2376

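--cluster-store: the address of the Consul server. Note that the ExecStart line above mistypes this flag as --cluser-store; the typo is discovered further down.

--cluster-advertise: the address (interface:port) this host advertises to Consul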
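dockerd rejects unknown flags at startup, and a one-letter typo such as `--cluser-store` is easy to overlook by eye. The bash sketch below reports any long option in an ExecStart line that is not on a small allowlist. The allowlist is an assumption that covers only the flags used in this post. Also, `--cluster-store` and `--cluster-advertise` belong to Docker's legacy overlay mode backed by an external key-value store. As far as I know, that mode was deprecated in Docker Engine 20.10 and removed in 23.0, so this walkthrough needs an older engine.

```bash
#!/usr/bin/env bash
# Sketch: report long options in a dockerd ExecStart line that are not on a
# small allowlist. The allowlist is an assumption: it covers only the flags
# used in this post, so extend it before using it on other configs.
KNOWN_DOCKERD_FLAGS=(--containerd --cluster-store --cluster-advertise --host)

check_execstart() {
  local -a words
  local word flag k known bad=0
  read -r -a words <<< "$1"
  for word in "${words[@]}"; do
    [[ $word == --* ]] || continue     # short options such as -H are skipped
    flag=${word%%=*}                   # --cluster-store=consul://... -> --cluster-store
    known=0
    for k in "${KNOWN_DOCKERD_FLAGS[@]}"; do
      if [[ $flag == "$k" ]]; then known=1; break; fi
    done
    if (( ! known )); then
      echo "unknown flag: $flag" >&2
      bad=1
    fi
  done
  return "$bad"
}
```

Usage: `check_execstart "$(grep ExecStart /usr/lib/systemd/system/docker.service)"`. This prints `unknown flag: --cluser-store` for the mistyped line and returns a non-zero status.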

[root@host1 ~]$ ip a|grep ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 10.0.0.135/24 brd 10.0.0.255 scope global ens33

Restart the Docker daemon.

At first I mistyped the IP in consul://10.0.0.134:8500 as host1's own IP, 10.0.0.135, and the Docker daemon would not start.

[root@host1 ~]$ systemctl daemon-reload

[root@host1 ~]$ systemctl start docker.service

[root@host1 ~]$ ps -ef|grep docker

root 54038 1 0 13:12 ? 00:00:00 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock -H tcp://0.0.0.0:2375

root 54229 52492 0 13:13 pts/0 00:00:00 grep --color=auto docker

[root@host1 ~]$ grep ExecStart /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock -H tcp://0.0.0.0:2376 --cluser-store=consul://10.0.0.134:8500 --cluster-advertise=ens33:2376

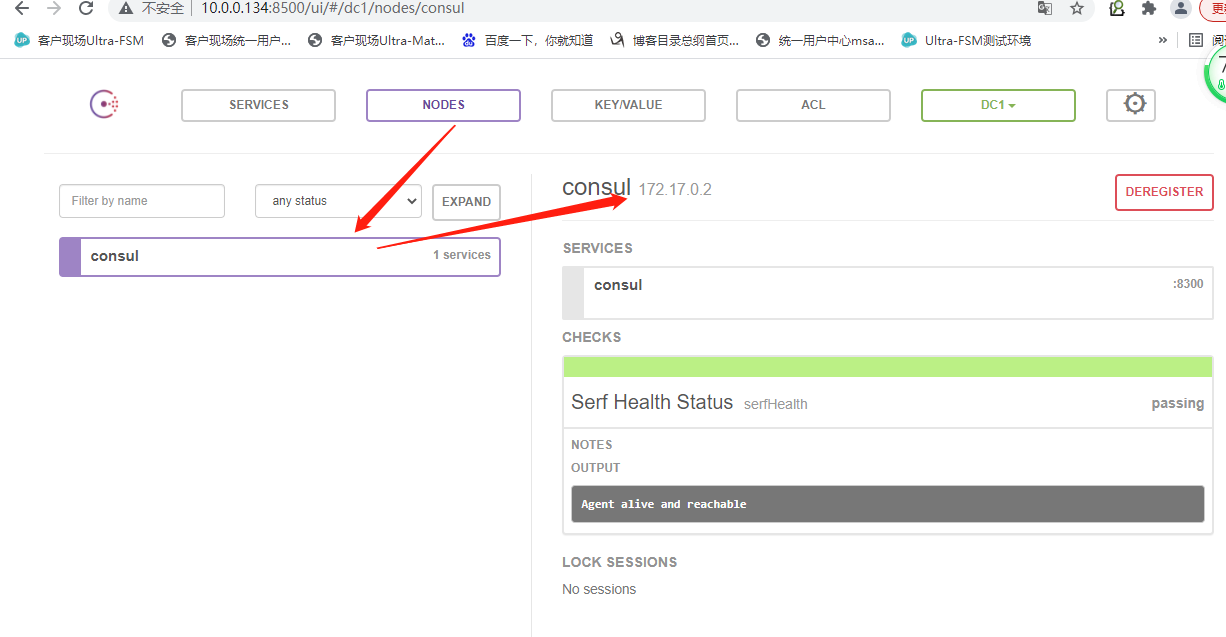

Check on the Consul web UI whether the two hosts have registered. They had not.

The IP shown above is the Consul container's IP.
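Instead of refreshing the web UI, you can also check registration through Consul's HTTP key-value API. `GET /v1/kv/<prefix>?keys` returns the keys under a prefix as a JSON array. The sketch below assumes the daemons register under `docker/nodes/<ip>:<port>`, which is what the Key/Value page usually shows for a `--cluster-store=consul://...` setup; check the prefix on your own Consul UI before relying on it. `node_registered` is a hypothetical helper, and it uses a fixed-string match rather than a real JSON parser.

```bash
#!/usr/bin/env bash
# Sketch: decide whether ADDR (e.g. 10.0.0.135:2376) appears among the keys
# returned by `curl -s 'http://10.0.0.134:8500/v1/kv/docker/nodes?keys'`.
# Assumption: nodes are stored under the docker/nodes/ prefix.
node_registered() {
  local keys_json=$1 addr=$2
  # Fixed-string match on the quoted key (including the closing quote),
  # so 10.0.0.13 does not match 10.0.0.135:2376. Not a real JSON parse.
  grep -qF "\"docker/nodes/${addr}\"" <<< "$keys_json"
}
```

Usage: `keys=$(curl -s 'http://10.0.0.134:8500/v1/kv/docker/nodes?keys'); node_registered "$keys" 10.0.0.135:2376 && echo "host1 registered"`.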

[root@mcw4 ~]$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3fc6e630abc2 progrium/consul "/bin/start -server …" 34 minutes ago Up 34 minutes 53/tcp, 53/udp, 8300-8302/tcp, 8400/tcp, 8301-8302/udp, 0.0.0.0:8500->8500/tcp, :::8500->8500/tcp consul

[root@mcw4 ~]$ docker exec -it consul hostname -i

172.17.0.2

----

Next I added -H tcp://0.0.0.0:2376 to the Docker service on the Consul host. After that, host1's Docker service would not come back up when restarted.

[root@mcw4 ~]$ grep ExecStart /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

[root@mcw4 ~]$ vim /usr/lib/systemd/system/docker.service

[root@mcw4 ~]$ grep ExecStart /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock -H tcp://0.0.0.0:2376

[root@mcw4 ~]$ systemctl daemon-reload

[root@mcw4 ~]$ systemctl restart docker.service

[root@mcw4 ~]$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3fc6e630abc2 progrium/consul "/bin/start -server …" 40 minutes ago Exited (1) 47 seconds ago consul

[root@mcw4 ~]$ docker start 3fc

3fc

Ugh, another hand-typing mistake: cluster-store was written as cluser-store.

Wrong:

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock -H tcp://0.0.0.0:2376 --cluser-store=consul://10.0.0.134:8500 --cluster-advertise=ens33:2376

Should be:

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock -H tcp://0.0.0.0:2376 --cluster-store=consul://10.0.0.134:8500 --cluster-advertise=ens33:2376

After fixing it, restart the Docker daemon:

[root@host1 ~]$ vim /usr/lib/systemd/system/docker.service

[root@host1 ~]$ grep ExecStart /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock -H tcp://0.0.0.0:2376 --cluster-store=consul://10.0.0.134:8500 --cluster-advertise=ens33:2376

[root@host1 ~]$ systemctl daemon-reload

[root@host1 ~]$ systemctl restart docker.service

host1 still did not show up on the Consul page.

So I changed the Consul machine's config back to include -H tcp://0.0.0.0:2376, which lets other hosts access it, and restarted the Docker daemon.

The flag is in place on the Consul host, but still no host registered:

[root@mcw4 ~]$ vim /usr/lib/systemd/system/docker.service

[root@mcw4 ~]$ systemctl daemon-reload

[root@mcw4 ~]$ systemctl restart docker.service

[root@mcw4 ~]$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3fc6e630abc2 progrium/consul "/bin/start -server …" 59 minutes ago Exited (1) 26 seconds ago consul

[root@mcw4 ~]$ docker start 3fc

3fc

[root@mcw4 ~]$ grep ExecStart /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock -H tcp://0.0.0.0:2376

I abandoned the container above and used the following one instead:

[root@mcw4 ~]$ systemctl daemon-reload

[root@mcw4 ~]$ systemctl restart docker.service

[root@mcw4 ~]$ docker run -d --network host -h consul --name=consul -p 8500:8500 --restart=always -e CONSUL_BIND_INTERFACE=ens33 consul

Unable to find image 'consul:latest' locally

latest: Pulling from library/consul

5758d4e389a3: Pull complete

57a5fd22f94c: Pull complete

f7e2614f51b4: Pull complete

e98e494e7397: Pull complete

35e8cfc01eae: Pull complete

ea1f421022a9: Pull complete

Digest: sha256:05d70d30639d5e0411f92fb75dd670ec1ef8fa4a918c6e57960db1710fd38125

Status: Downloaded newer image for consul:latest

docker: Error response from daemon: Conflict. The container name "/consul" is already in use by container "3fc6e630abc28f4add16eb8448fa069dfd52eab0c2b1b6a4f0f5cbb6311f4f9f". You have to remove (or rename) that container to be able to reuse that name.

See 'docker run --help'.

[root@mcw4 ~]$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@mcw4 ~]$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3fc6e630abc2 progrium/consul "/bin/start -server …" 2 hours ago Exited (1) 4 minutes ago consul

[root@mcw4 ~]$ docker run -d --network host -h consul --name=consul2 -p 8500:8500 --restart=always -e CONSUL_BIND_INTERFACE=ens33 consul #use the name consul2 instead

WARNING: Published ports are discarded when using host network mode

f425838bfa074977cbbfe98d5c9cc4267aa9b89baa4deb9b19d3997f33134129

[root@mcw4 ~]$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f425838bfa07 consul "docker-entrypoint.s…" 18 seconds ago Up 16 seconds consul2

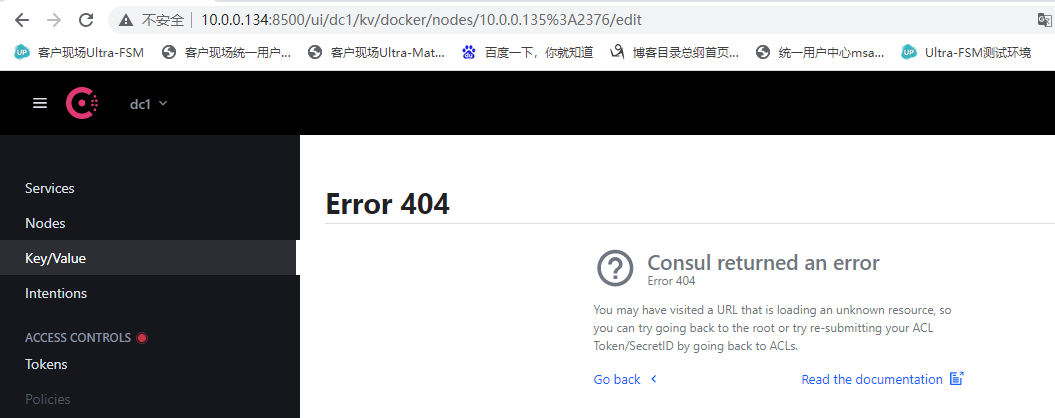

Click into the 135 entry:

[root@mcw4 ~]$ docker network create -d overlay mcw_ov

Error response from daemon: This node is not a swarm manager. Use "docker swarm init" or "docker swarm join" to connect this node to swarm and try again.

Fix:

Run: docker swarm init

Error:

[root@mcw4 ~]$ docker swarm init

Error response from daemon: could not choose an IP address to advertise since this system has multiple addresses on different interfaces (10.0.0.134 on ens33 and 172.16.1.134 on ens37) - specify one with --advertise-addr

Fix: pass --advertise-addr to select the network interface:

[root@mcw4 ~]$ docker swarm init --advertise-addr ens33

Swarm initialized: current node (shd9o6v628yuodd068hhqtior) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-2x07y8n9ifzgvh2qbxv07xjjoyivowzxn65vhhk00x5a81vcd3-112p82a3lj77jy1s04n2n156f 10.0.0.134:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

The output has another hint, so let's follow it:

[root@mcw4 ~]$ docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-2x07y8n9ifzgvh2qbxv07xjjoyivowzxn65vhhk00x5a81vcd3-aios5d88jiiq7ikgl44erbuno 10.0.0.134:2377

[root@mcw4 ~]$

It turns out this step is not needed, because the node is already a manager:

[root@mcw4 ~]$ docker swarm join --token SWMTKN-1-2x07y8n9ifzgvh2qbxv07xjjoyivowzxn65vhhk00x5a81vcd3-aios5d88jiiq7ikgl44erbuno 10.0.0.134:2377

Error response from daemon: This node is already part of a swarm. Use "docker swarm leave" to leave this swarm and join another one.

[root@mcw4 ~]$ docker network create -d overlay mcw_ov #the network is created successfully

rt8w4u0kjsrkmnf6t34ququwg

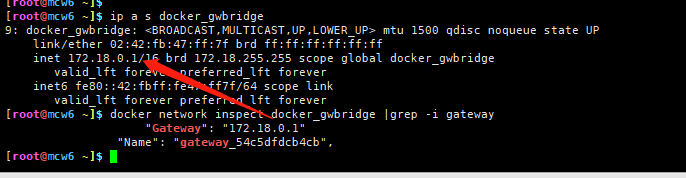

[root@mcw4 ~]$ docker network ls #list networks: mcw_ov is the one I created; docker_gwbridge (like ingress) appears to have been created by docker swarm init, and it is related

NETWORK ID NAME DRIVER SCOPE

10494d6bd248 bridge bridge local

80955ec61abc docker_gwbridge bridge local

52042c49021b host host local

r9ugyxc34mrm ingress overlay swarm

rt8w4u0kjsrk mcw_ov overlay swarm

fe4771ca21b4 none null local

[root@mcw4 ~]$ docker network inspect mcw_ov #inspect the overlay network mcw_ov that I created

[

{

"Name": "mcw_ov",

"Id": "rt8w4u0kjsrkmnf6t34ququwg",

"Created": "2022-01-02T09:43:13.815459229Z",

"Scope": "swarm",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "10.0.1.0/24",

"Gateway": "10.0.1.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": null,

"Options": {

"com.docker.network.driver.overlay.vxlanid_list": "4097"

},

"Labels": null

}

]

[root@mcw4 ~]$ docker network inspect docker_gwbridge #inspect the other network

[

{

"Name": "docker_gwbridge",

"Id": "80955ec61abcca7ddd3a99323758c21ce142c050d2c3384e0245d923ed638611",

"Created": "2022-01-02T17:40:02.262740556+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"ingress-sbox": {

"Name": "gateway_ingress-sbox",

"EndpointID": "119f4bb7ff7127b6f724fcf185efa8d61ac5d399e26ec170cb2721d925dace1d",

"MacAddress": "02:42:ac:12:00:02",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.enable_icc": "false",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.name": "docker_gwbridge"

},

"Labels": {}

}

]

[root@mcw4 ~]$

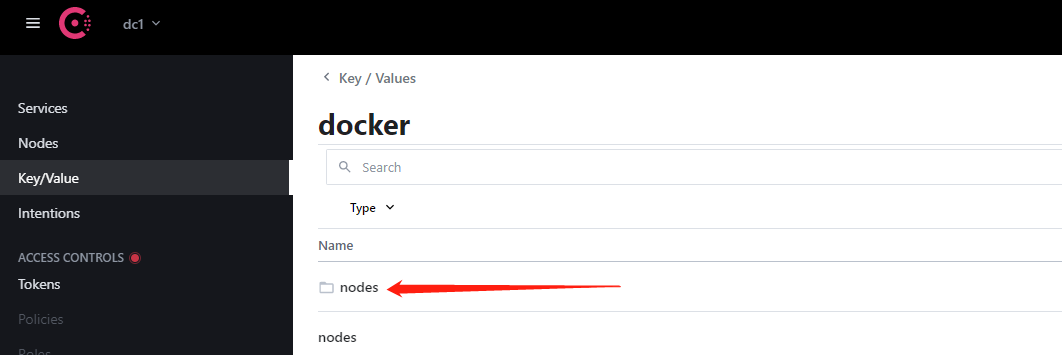

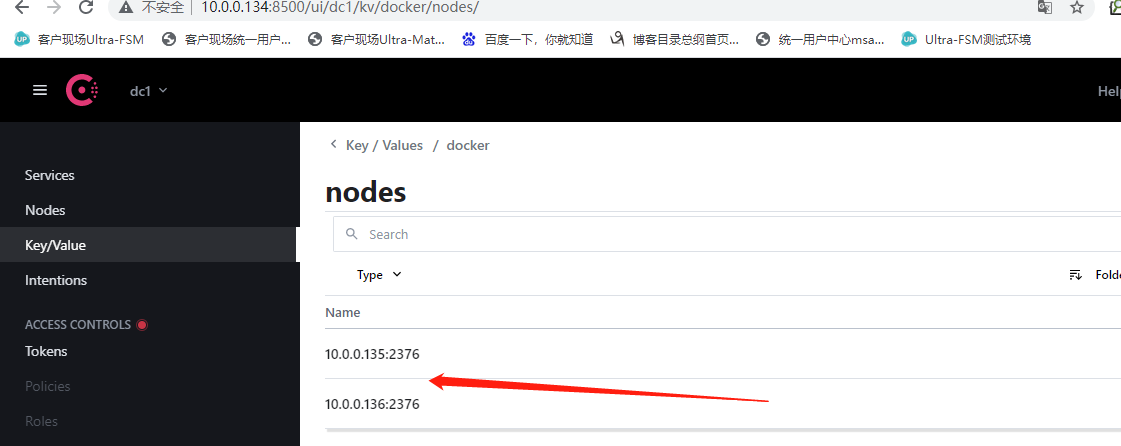

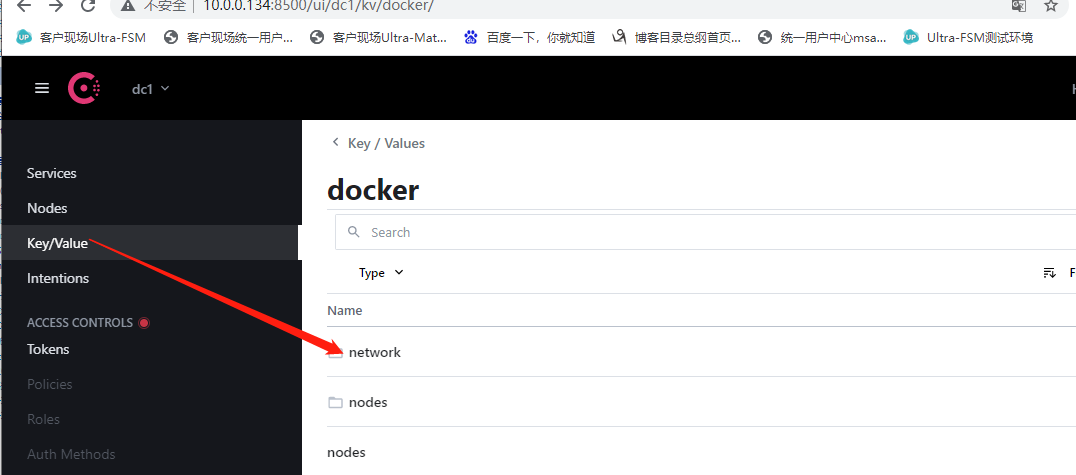

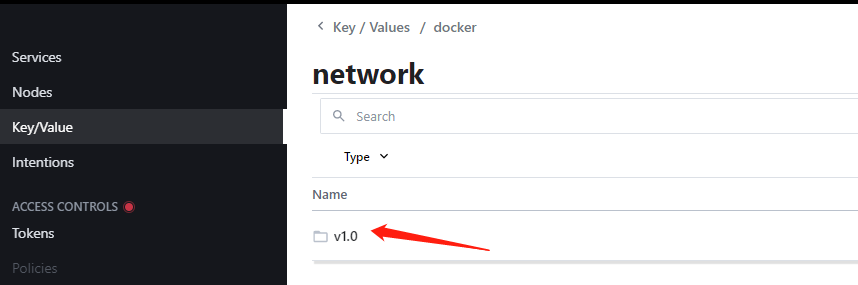

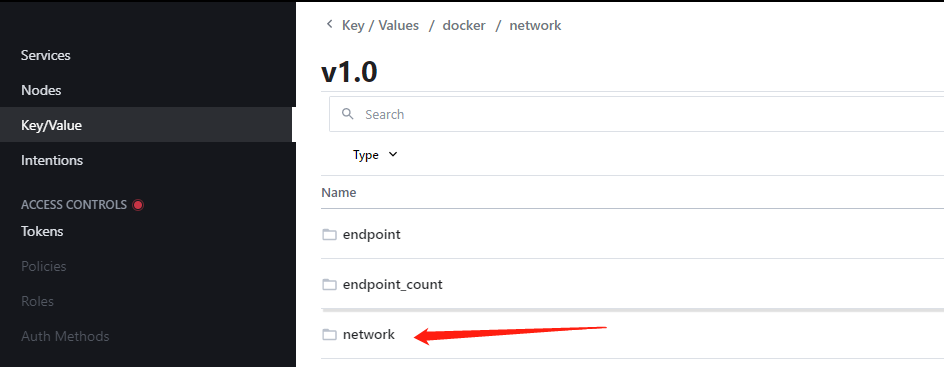

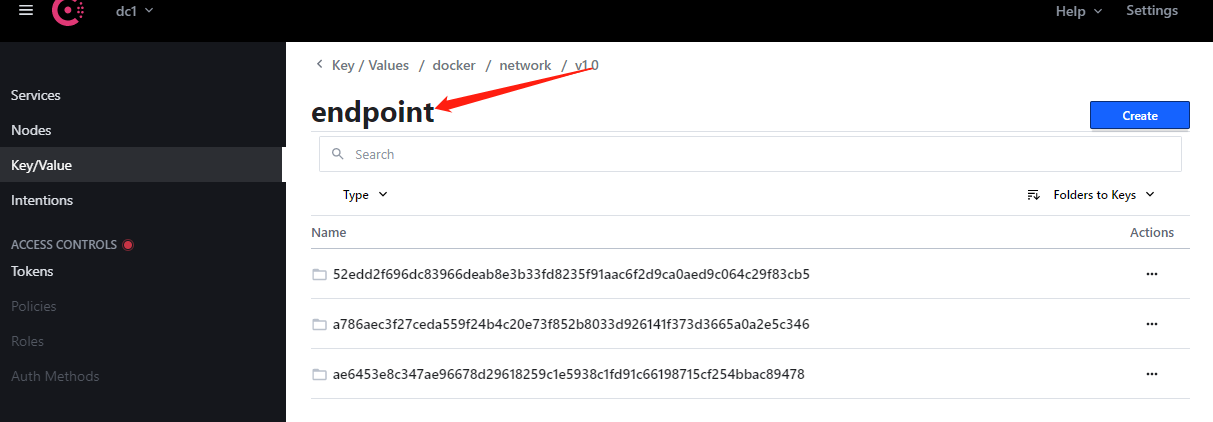

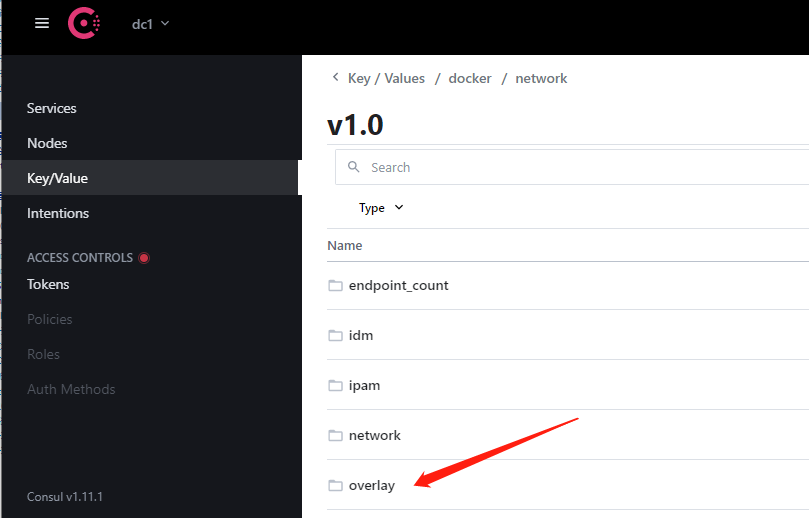

The new network entries can be seen in the key/value store.

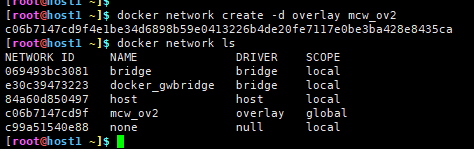

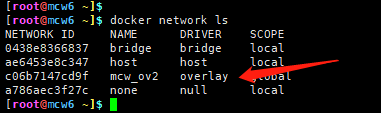

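Create an overlay network on host1 (-d sets the driver to overlay); its scope is shown as global.

Then list the networks on host2: the network created on host1 is there. (The network created one step earlier was created on the Consul host.) host2 read the new network's data from Consul, and any later changes are synchronized to both host1 and host2.

The Key/Value section of the Consul UI also seems to have gained new entries: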

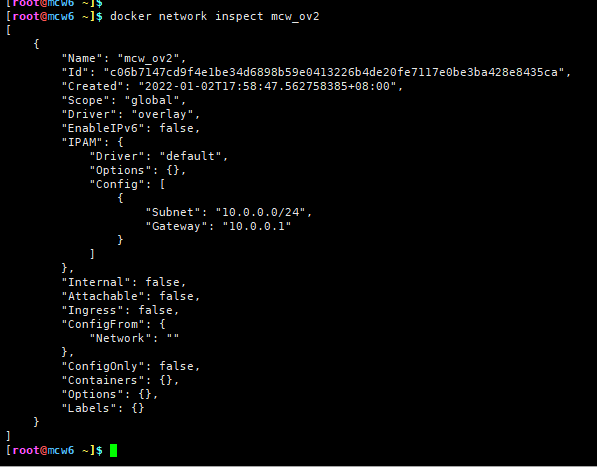

Inspect this network's details on host2.

IPAM stands for IP Address Management. Docker automatically assigned this network the address space 10.0.0.0/24.
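In the outputs further down, the gateway of mcw_ov2 is 10.0.0.1, and the containers receive 10.0.0.2, .3, .4 and .5 in the order they are started. The hypothetical bash helper below computes the N-th address inside a block, which helps when predicting or checking those addresses. The sequential order reflects what was observed in this walkthrough. It is not a documented allocation guarantee of Docker's IPAM.

```bash
#!/usr/bin/env bash
# Sketch: print the N-th host address of an IPv4 CIDR block
# (N=1 is the first usable address, e.g. the gateway 10.0.0.1 of mcw_ov2).
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

nth_host() {
  local cidr=$1 n=$2 a b c d len mask base size
  IFS=. read -r a b c d <<< "${cidr%/*}"
  len=${cidr#*/}
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  base=$(( ((a << 24) | (b << 16) | (c << 8) | d) & mask ))
  size=$(( 1 << (32 - len) ))
  # Reject the network address (0) and the broadcast address (size - 1).
  if (( n < 1 || n > size - 2 )); then
    echo "host index $n is outside $cidr" >&2
    return 1
  fi
  int_to_ip $(( base + n ))
}
```

For example, `nth_host 10.22.1.0/24 1` gives the first usable address of the explicitly chosen subnet 10.22.1.0/24.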

Earlier, the overlay network was created on host1 (mcw5), and it is visible on host2 (mcw6) with scope global.

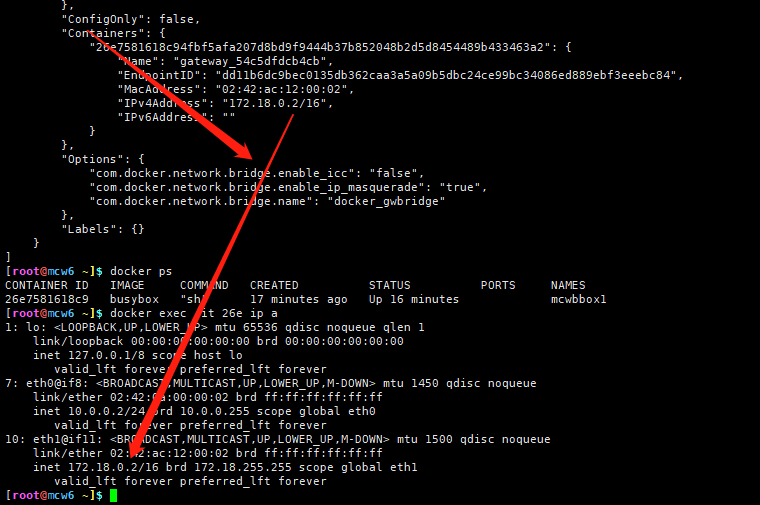

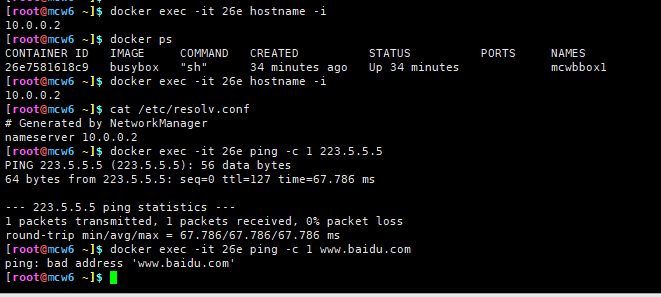

Now create and run a container on host2.

[root@mcw6 ~]$ docker network ls #after the overlay network is created on host1, host2 can see it, but docker_gwbridge does not exist yet

NETWORK ID NAME DRIVER SCOPE

473bc155c97e bridge bridge local

ae6453e8c347 host host local

c06b7147cd9f mcw_ov2 overlay global

a786aec3f27c none null local

[root@mcw6 ~]$ docker run -itd --name mcwbbox1 --network mcw_ov2 busybox #run a container on the new overlay network

Unable to find image 'busybox:latest' locally

latest: Pulling from library/busybox

5cc84ad355aa: Pull complete

Digest: sha256:5acba83a746c7608ed544dc1533b87c737a0b0fb730301639a0179f9344b1678

Status: Downloaded newer image for busybox:latest

26e7581618c94fbf5afa207d8bd9f9444b37b852048b2d5d8454489b433463a2

[root@mcw6 ~]$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

26e7581618c9 busybox "sh" About a minute ago Up 48 seconds mcwbbox1

[root@mcw6 ~]$ docker network ls #once a container on this network runs on host2, docker_gwbridge exists

NETWORK ID NAME DRIVER SCOPE

473bc155c97e bridge bridge local

f3a7e89aa10e docker_gwbridge bridge local #Docker creates the bridge network docker_gwbridge to give every container attached to an overlay network access to the outside network

ae6453e8c347 host host local

c06b7147cd9f mcw_ov2 overlay global

a786aec3f27c none null local

[root@mcw6 ~]$

[root@mcw6 ~]$ docker network inspect mcw_ov2

[

{

"Name": "mcw_ov2",

"Id": "c06b7147cd9f4e1be34d6898b59e0413226b4de20fe7117e0be3ba428e8435ca",

"Created": "2022-01-02T17:58:47.562758385+08:00",

"Scope": "global",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "10.0.0.0/24",

"Gateway": "10.0.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"26e7581618c94fbf5afa207d8bd9f9444b37b852048b2d5d8454489b433463a2": {

"Name": "mcwbbox1",

"EndpointID": "cf63df6e9d160d3debdcffd6ae6745ab8d50f651d1ca4998d5b60e6f2b50f9a0",

"MacAddress": "02:42:0a:00:00:02",

"IPv4Address": "10.0.0.2/24",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

[root@mcw6 ~]$ docker network inspect docker_gwbridge

[

{

"Name": "docker_gwbridge",

"Id": "f3a7e89aa10ecf78a6b5d602ec7c79471200abb536ae40ddec1ba834dd03f5c9",

"Created": "2022-01-02T18:17:38.506568799+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"26e7581618c94fbf5afa207d8bd9f9444b37b852048b2d5d8454489b433463a2": {

"Name": "gateway_54c5dfdcb4cb",

"EndpointID": "dd11b6dc9bec0135db362caa3a5a09b5dbc24ce99bc34086ed889ebf3eeebc84",

"MacAddress": "02:42:ac:12:00:02",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.enable_icc": "false",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.name": "docker_gwbridge"

},

"Labels": {}

}

]

[root@mcw6 ~]$

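This way, containers can reach the external network through this bridge.

For external clients to reach a container, publish a port on the host, for example: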

docker run -p 80:80 -d --net mcw_ov2 --name web1 httpd

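Here the network could reach the outside, but the container's DNS server IP conflicted with the host's, which is probably why domain names could not be resolved.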

echo "net.ipv4.ip_forward = 1">>/etc/sysctl.conf

sysctl -p
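The `echo ... >>` line appends another copy of the setting every time it is rerun. Below is a hypothetical idempotent alternative in bash that replaces an existing `net.ipv4.ip_forward` line instead of appending. It assumes GNU sed for in-place editing with `-i -E`.

```bash
#!/usr/bin/env bash
# Sketch: set "KEY = VALUE" in a sysctl-style file, replacing any existing
# line for KEY instead of appending a duplicate. Assumes GNU sed (-i -E).
ensure_sysctl() {
  local file=$1 key=$2 value=$3
  local re="^[[:space:]]*${key//./[.]}[[:space:]]*="   # dots matched literally
  if grep -qE "$re" "$file" 2>/dev/null; then
    sed -i -E "s|${re}.*|${key} = ${value}|" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}
```

Usage: `ensure_sysctl /etc/sysctl.conf net.ipv4.ip_forward 1 && sysctl -p`.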

The following output shows that containers on an overlay network can communicate directly, and that Docker also provides a DNS service.

[root@mcw6 ~]$ docker run -itd --name mcwbox61 --network mcw_ov2 busybox #create container mcwbox61 on host2, attached to the overlay network

7b2a29d00efd2501de6505eb663bd83f7aa6a38adaca341934ddca3ed4480593

[root@mcw6 ~]$ docker exec mcwbox61 ip r

default via 172.18.0.1 dev eth1

10.0.0.0/24 dev eth0 scope link src 10.0.0.3

172.18.0.0/16 dev eth1 scope link src 172.18.0.3

[root@mcw6 ~]$ docker run -itd --name mcwbox62 --network mcw_ov2 busybox #create container mcwbox62 on host2, attached to the overlay network

76fc1a6d0fac9eff697bce3b360fb0c92c84c1149c83d8f89e5a8f9517837369

[root@mcw6 ~]$ docker exec mcwbox62 ip r

default via 172.18.0.1 dev eth1

10.0.0.0/24 dev eth0 scope link src 10.0.0.4

172.18.0.0/16 dev eth1 scope link src 172.18.0.4

[root@mcw6 ~]$ docker exec mcwbox62 ping -c 1 mcwbox61 #same host, same overlay network: the containers are connected and can reach each other by name through Docker's DNS

PING mcwbox61 (10.0.0.3): 56 data bytes

64 bytes from 10.0.0.3: seq=0 ttl=64 time=0.311 ms

--- mcwbox61 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.311/0.311/0.311 ms

[root@mcw6 ~]$

[root@mcw5 ~]$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@mcw5 ~]$ docker run -itd --name mcwbox51 --network mcw_ov2 busybox #create container mcwbox51 on host1, attached to the overlay network

a4c6674fae1696df44e125108238a1758f4196683cb96e1e889784e59bd39f37

[root@mcw5 ~]$ docker exec a4c ip r #check the container's IP

default via 172.18.0.1 dev eth1

10.0.0.0/24 dev eth0 scope link src 10.0.0.5

172.18.0.0/16 dev eth1 scope link src 172.18.0.2

[root@mcw5 ~]$ docker exec a4c ping -c 2 10.0.0.4 #test connectivity by IP from the container on host1 to mcwbox62 on host2

PING 10.0.0.4 (10.0.0.4): 56 data bytes

64 bytes from 10.0.0.4: seq=0 ttl=64 time=1065.117 ms

64 bytes from 10.0.0.4: seq=1 ttl=64 time=65.441 ms

--- 10.0.0.4 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 65.441/565.279/1065.117 ms

[root@mcw5 ~]$ docker exec a4c ping -c 2 mcwbox61 #test connectivity by name from the container on host1 to mcwbox61 on host2

PING mcwbox61 (10.0.0.3): 56 data bytes

64 bytes from 10.0.0.3: seq=0 ttl=64 time=1003.939 ms

64 bytes from 10.0.0.3: seq=1 ttl=64 time=3.240 ms

--- mcwbox61 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 3.240/503.589/1003.939 ms
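In these containers, eth0 is the overlay interface (10.0.0.0/24) and eth1 is the docker_gwbridge side (172.18.0.0/16), as the `ip r` output shows. The pings above use a peer IP that was copied by hand. A hypothetical bash helper can instead read that IP from the route table. It assumes the `ip r` line format shown above, with `dev <iface>` and `src <addr>` on the same line.

```bash
#!/usr/bin/env bash
# Sketch: print the source address a container uses on IFACE, taken from the
# "scope link src" line of its `ip r` output (format as shown above).
iface_src() {
  awk -v want="$2" '{
    dev = ""; src = ""
    for (i = 1; i < NF; i++) {
      if ($i == "dev") dev = $(i + 1)
      if ($i == "src") src = $(i + 1)
    }
    if (dev == want && src != "") { print src; exit }
  }' <<< "$1"
}
```

Usage on host2: `docker exec mcwbox62 ping -c 1 "$(iface_src "$(docker exec mcwbox61 ip r)" eth0)"`.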

[root@mcw6 ~]$ ip netns #list Docker's network namespaces; nothing is shown because the symlink below has not been created yet

[root@mcw6 ~]$ ln -s /var/run/docker/netns /var/run/netns #add the symlink

[root@mcw6 ~]$ ip netns #now Docker's namespaces are listed

2415736b51bf (id: 3)

23437c6848c8 (id: 2)

54c5dfdcb4cb (id: 1)

1-c06b7147cd (id: 0) #this is the namespace of mcw_ov2; it is shown here on host2, and the same namespace is also visible on host1

[root@mcw6 ~]$ ip netns exec 1-c06b7147cd brctl show #brctl is not installed; it comes from the bridge-utils package

exec of "brctl" failed: No such file or directory

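[root@mcw6 ~]$ ip netns exec 1-c06b7147cd brctl show #inspect the separate network namespace Docker created for the overlay network

bridge name bridge id STP enabled interfaces #it contains a bridge, br0; each endpoint is a veth pair

br0 8000.1a743dca37a1 no veth0 #with one end in the container (its eth0) and the other end attached to br0

veth1

veth2 #besides all the endpoints, br0 also connects a VXLAN device

vxlan0 #used to build VXLAN tunnels to the other hosts; containers on different hosts communicate through this tunnel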

[root@mcw6 ~]$ ip netns exec 1-c06b7147cd ip -d l show vxlan0 #inspect the vxlan0 device attached to br0 in this namespace

6: vxlan0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UNKNOWN mode DEFAULT

link/ether ea:17:50:46:d6:e6 brd ff:ff:ff:ff:ff:ff link-netnsid 0 promiscuity 1

vxlan id 256 srcport 0 0 dstport 4789 proxy l2miss l3miss ageing 300 #includes the VXLAN ID

bridge_slave state forwarding priority 32 cost 100 hairpin off guard off root_block off fastleave off learning on flood on port_id 0x8001 port_no 0x1 designated_port 32769 designated_cost 0 designated_bridge 8000.1a:74:3d:ca:37:a1 designated_root 8000.1a:74:3d:ca:37:a1 hold_timer 0.00 message_age_timer 0.00 forward_delay_timer 0.00 topology_change_ack 0 config_pending 0 proxy_arp off proxy_arp_wifi off mcast_router 1 mcast_fast_leave off mcast_flood on addrgenmode eui64

[root@mcw6 ~]$ docker ps -a #list the containers on this host

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

76fc1a6d0fac busybox "sh" 52 minutes ago Up 52 minutes mcwbox62

7b2a29d00efd busybox "sh" 54 minutes ago Up 54 minutes mcwbox61

26e7581618c9 busybox "sh" 2 hours ago Up 2 hours mcwbbox1
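Here the overlay namespace name is `1-` followed by the first 10 characters of the network ID: c06b7147cd9f... becomes 1-c06b7147cd. The hypothetical bash helper below finds that namespace in the `ip netns` output so it does not have to be copied by hand. It assumes the naming pattern observed here holds. Because the numeric prefix may differ on other setups, the helper matches only the `-<first 10 chars of the ID>` suffix.

```bash
#!/usr/bin/env bash
# Sketch: given `ip netns` output and a full network ID, print the namespace
# whose name ends in "-<first 10 chars of the ID>" (observed: 1-c06b7147cd).
overlay_netns() {
  local short=${2:0:10} name _
  while read -r name _; do
    if [[ $name == *-"$short" ]]; then
      echo "$name"
      return 0
    fi
  done <<< "$1"
  return 1
}
```

Usage: `ns=$(overlay_netns "$(ip netns)" "$(docker network inspect -f '{{.Id}}' mcw_ov2)") && ip netns exec "$ns" brctl show`.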

[root@mcw5 ~]$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a4c6674fae16 busybox "sh" 2 hours ago Up 2 hours mcwbox51

[root@mcw5 ~]$ docker network create -d overlay mcw_ov3 #create a new network, mcw_ov3, on host1

0ca479e2cef7f21697f2a829bb2ccdcc7098f9a088bf6d5bf72959b0d30848ae

[root@mcw5 ~]$ docker run -itd --name mcwbox52 --network mcw_ov3 busybox #run a container on the new network

eedf850604b4f7a52ca241fa4e20502d014224a1e2d49f74c3d80a1cfb202201

[root@mcw5 ~]$ docker exec -it mcwbox52 ip r #mcwbox52 was assigned 10.0.1.2

default via 172.18.0.1 dev eth1

10.0.1.0/24 dev eth0 scope link src 10.0.1.2

172.18.0.0/16 dev eth1 scope link src 172.18.0.3

[root@mcw5 ~]$ docker exec -it mcwbox52 ping -c 2 10.0.0.3 #ping a container on the first overlay from a container on the second: no connectivity, which confirms that different overlay networks are isolated

PING 10.0.0.3 (10.0.0.3): 56 data bytes

--- 10.0.0.3 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

[root@mcw5 ~]$ docker network connect mcw_ov2 mcwbox52 #to let mcwbox52 on the second overlay reach containers on the first, connect it to mcw_ov2

[root@mcw5 ~]$ docker exec -it mcwbox52 ping -c 2 10.0.0.3 #after connecting, the ping succeeds

PING 10.0.0.3 (10.0.0.3): 56 data bytes

64 bytes from 10.0.0.3: seq=0 ttl=64 time=34.884 ms

^C

--- 10.0.0.3 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 34.884/34.884/34.884 ms

[root@mcw5 ~]$ docker exec -it mcwbox52 ping -c 1 mcwbox62 #ping by container name

PING mcwbox62 (10.0.0.4): 56 data bytes

64 bytes from 10.0.0.4: seq=0 ttl=64 time=42.705 ms

--- mcwbox62 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 42.705/42.705/42.705 ms

[root@mcw5 ~]$
