Every organization faces a war between two schools of thought, with “If it ain’t broke, don’t fix it” on one side and “release early and often” on the other. This blog post looks for a way for enterprise consumers to embrace fast-paced changes in the software industry.

Today’s software industry feels like a George Carlin Top 40 radio DJ spoof about a song recorded at 9 AM, rocketed to number one on the charts by 1 PM, and a golden oldie by 5 PM. Software producers generally force consumers onto a never-ending treadmill of change, and consumers are exhausted.

Pain from change is real. But it’s not new.

In 1980, when I was a junior system administrator at a small engineering college, a not-so-brilliant idea weaseled its way into my head. The circuit boards inside the refrigerator-sized system I managed were nasty and I decided to vacuum them. The job was simple: pull a board, vacuum it, vacuum its backplane slot and reseat it. I vacuumed dozens of boards and by the time I finished, that system was the cleanest it had been since it was new. Unfortunately, it would not power on.

I called vendor field service. Our support technician brought in a second-level support technician, who brought in a third-level support technician, and before long an army of support technicians with oscilloscopes, meters, alligator clips, cables and other diagnostic equipment pored over every inch of that system while my users twiddled their thumbs.

It took a few days, but they finally brought it back to life. And then Terry, our field service technician, pointed his finger at me and said, “Greg, from now on, if it ain’t broke, don’t fix it!”

I will never forget that life lesson.

But balance is everything in life.

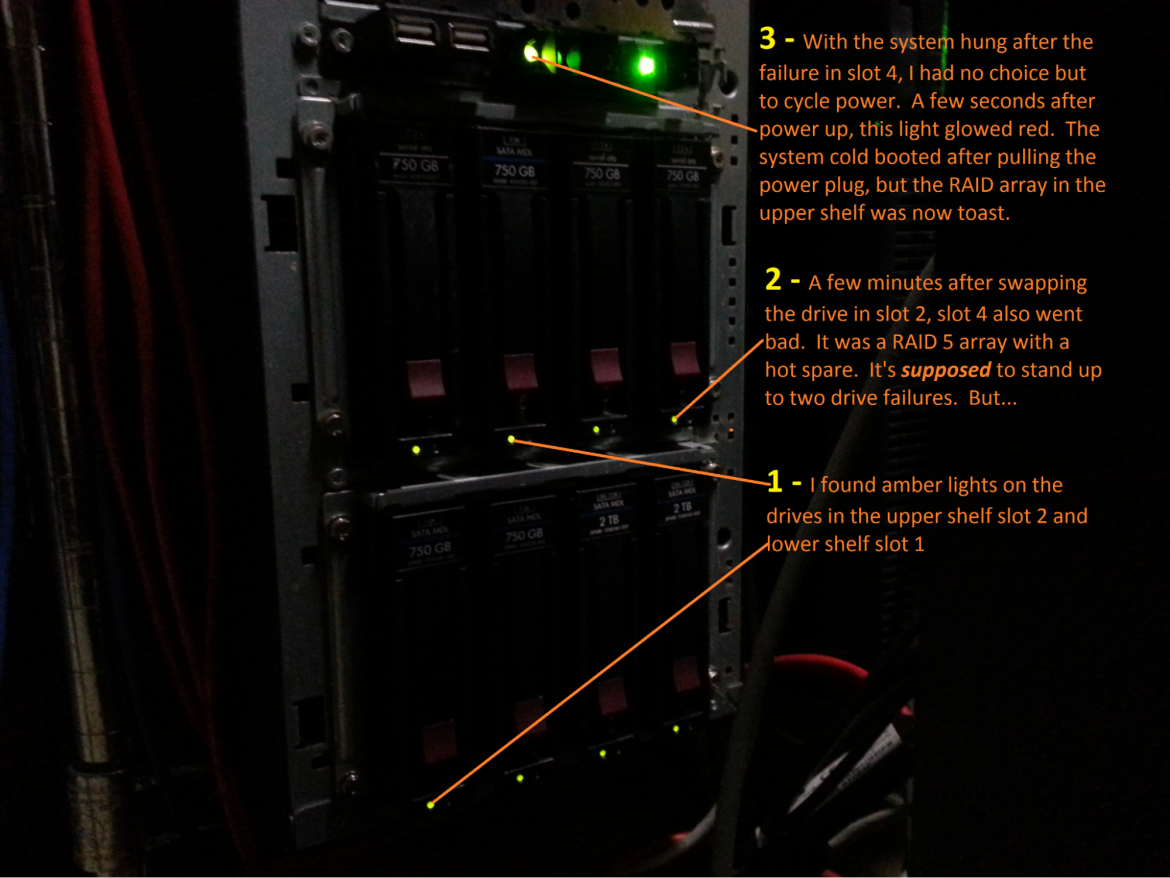

In December 2014, I operated a virtual machine infrastructure with a file/print server, email server, web server and a few other virtual servers, all centered around an NFS server with RAID sets of HP 750 GB SATA drives I bought cheap from eBay. And on Saturday, December 20, 2014, I experienced what I call my Apollo 13 moment.

I walked downstairs around 5:30 PM and noticed two of my 750 GB drives displayed amber fault lights. I had spares, so I swapped the failed drives and the RAID sets began rebuilding while I drove to Applebee’s to pick up our family dinner. When I got back home, I found another drive had failed. My RAID 10 set was dead and so was every virtual server I had ever built. My family ate dinner without me. I ate my dinner after midnight.

I recovered. It wasn’t easy. I had good backups for my file/print and email servers. But I had never backed up my new web server, so I had to rebuild that VM from scratch. I will always be grateful to Brewster Kahle’s mom for recording every news program she could get in front of from sometime in the 1970s until her death. She inspired Brewster Kahle to build web.archive.org, and his Wayback Machine had copies of nearly all my web pages and pointers to some supporting pictures and videos. I was back online shortly after midnight Christmas morning. My jet lag and stress headache faded before New Year’s Day.

That near-disaster was my fault. I caused it. Those 750 GB drives were cheap because they had a firmware problem. Sometimes they took themselves offline for no reason. An update was available, but it was a hassle to apply, I had run them for a long time, they weren’t broken, and so I didn’t fix them. I took on an insane amount of foolish risk and it almost shut me down.

Sound familiar? Customers often ask me, “Why pour time into updates when what I have runs just fine?” The answer is that a bug in your old version might assert itself at the worst possible time and force you to forgo sleep for the next five days to recover. And you’ll be on your own because nobody in your chain of support will remember the quirks of your old version when it fails.

There’s more.

When Digital Equipment Corporation (DEC) introduced VAX/VMS in 1977, it promised upcoming VMS versions would retain compatibility with old versions forever. DEC kept its promise. Even in the 1990s, it ran demos of then-new systems running the same binaries as the original VAX-11/780. But that promise cost DEC dearly, because VMS was not able to adapt to newer and faster hardware in time, and DEC ultimately lost everything to more nimble competitors. Today DEC is a footnote in the dustbin of history, and ex-DEC people still grumble about what could have been, if only…

Accumulating technical debt is like borrowing money from a predatory lender. The interest rate never goes down. But too many consumers condition themselves to accept it because everyone hates change.

Here is a sample of customer feedback about change.

“Fail fast, fail forward doesn’t work here.”

“If we had people for testing and labs, we would not have issues.”

“Our risk tolerance is zero.”

“We cannot cause sev 1 and sev 2 incidents.”

“We have even more visibility on operational excellence now than ever.”

“Changes must be well planned and thought out.”

“We have to work around network moratoriums and fit everything into the calendar.”

And my favorite, paraphrased with language less colorful than the original statement:

“You would be mad if you had to buy a new refrigerator every 18 months, especially if that new refrigerator doesn’t give you anything new.”

Here is feedback on that customer feedback.

Fail fast, fail forward works if you do it early.

Zero risk is a myth. Reduce risk by embracing progress and failing fast and early.

Avoid production sev 1 and sev 2 incidents by baking new ideas in an innovation pipeline.

Innovation pipelines continuously improve operational excellence by…

implementing well-planned and thought-out changes,

according to a predictable schedule.

IT infrastructures are not commodity refrigerators. Nobody launches cyberattacks against ordinary refrigerators. IoT refrigerators are different. They offer little websites connected to the public internet. Keep them updated or face ugly consequences.

Today, I see customers with complex layers of software bandages that would make Rube Goldberg cringe, all to retain compatibility with obsolete processes and equipment, all in the name of stability. But that so-called stability can be an illusion. Sooner or later, it will either fail and cause a disaster, or maintaining it will hamper progress so much that the organization could face obsolescence.

To thrive in today’s world of constant change, consumers must become agile. Cyberattackers constantly probe for weaknesses, competition for customers is fierce and software vendors release bug fixes and new capabilities at a torrid pace. Remaining static does not reduce risk. Remaining static really means accepting more risk as systems grow obsolete.

But agility also comes with risk. Upgrades are rarely smooth, new versions often introduce new bugs, and infrastructure instability can generate ugly headlines and sometimes incur fines. Or worse. What happens if a 911 call system, or a power grid, or a critical medical system fails? When the systems that modern society takes for granted fail, chaos often follows.

We can’t remain static. We can’t move forward. What’s the answer?

Embrace innovation by making it an integral part of business operations. This means rethinking everything. Instead of multi-year test cycles and massive upgrade efforts that disrupt everyone, embrace a never-ending stream of incremental improvements that prove themselves in a series of ever-more sophisticated lab environments. As potential improvements pass through integration stages, subject them to automated tests with realistic synthetic workloads. And then, by the time they’re production-ready, bugs should be fixed because they’ve passed through a gauntlet of high-quality testing.

I call this an innovation pipeline. Here is the vision.

It starts with small innovation projects across the organization.

Most fail fast.

But a few…

make it into a first-stage integration lab,

where more fail fast.

Survivors go to a QA lab,

where testing teams find and fix integration problems.

Then they go to a pre-production lab,

where testing teams use realistic synthetic workloads to find and fix scale problems.

And eventually into production,

all on a predictable schedule.
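For readers who think in code, the stages above can be sketched as a series of gates. This is only an illustration under loud assumptions: the stage names come from this post, but the `Candidate` class, the numeric scores, the thresholds and the gate functions are all hypothetical stand-ins for real test results. It also simplifies the QA and pre-production labs to pass/fail, where real teams would find and fix problems and try again.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Candidate:
    """A hypothetical innovation project moving through the pipeline."""
    name: str
    integrates: float  # made-up score in [0, 1] standing in for integration test results
    scales: float      # made-up score in [0, 1] standing in for synthetic-workload results
    history: List[str] = field(default_factory=list)


# Each lab is a (name, gate) pair. The thresholds are arbitrary illustrations.
LABS: List[Tuple[str, Callable[[Candidate], bool]]] = [
    ("integration lab", lambda c: c.integrates >= 0.5),
    ("QA lab", lambda c: c.integrates >= 0.8),
    ("pre-production lab", lambda c: c.scales >= 0.8),
]


def run_pipeline(candidates: List[Candidate]) -> List[Candidate]:
    """Push candidates through each lab in order; return those that reach production."""
    survivors = list(candidates)
    for lab_name, gate in LABS:
        passed = []
        for c in survivors:
            if gate(c):
                c.history.append(f"passed {lab_name}")
                passed.append(c)
            else:
                # Failing early, in a lab, is the cheap kind of failure.
                c.history.append(f"failed fast in {lab_name}")
        survivors = passed
    for c in survivors:
        c.history.append("released to production")
    return survivors


if __name__ == "__main__":
    projects = [
        Candidate("a", integrates=0.9, scales=0.9),
        Candidate("b", integrates=0.3, scales=0.9),
        Candidate("c", integrates=0.9, scales=0.4),
        Candidate("d", integrates=0.6, scales=0.9),
    ]
    released = run_pipeline(projects)
    for p in projects:
        print(p.name, "->", "; ".join(p.history))
    print("released:", [p.name for p in released])
```

The point of the sketch is the shape, not the numbers: every candidate meets progressively stricter gates, and anything that fails does so in a lab rather than in production.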

Implementing this vision solves problems on both sides of the “release early and often” vs. “don’t fix it if it ain’t broke” scale. It’s a mindset change across the organization. It might require up-front investment, but doesn’t need to cost a fortune. The payoff is future technical debt reduction and associated interest savings on that debt both in measurable dollars and opportunity costs.

Let Red Hat help assess where you are now and position you to embrace progress.

To learn more, check out this two-minute video about Red Hat Open Innovation Labs. This is more than just a virtual sandbox somewhere on the internet. It’s an immersive residency, pairing your engineers with open source experts, transforming your ideas into business outcomes. And be sure to download a free ebook with more details. Or contact Red Hat Services for consulting assistance.

Even if “it ain’t broke,” you can still improve it. Your customers will like it. Your competitors won’t. Never stop challenging yourself.