If you are thinking that you are having flashbacks as Intel is launching the “Sapphire Rapids” server CPUs – the company’s fourth generation in the Xeon SP family of server processors – you are not alone. We have been waiting for this launch for the better part of a year, Intel has been teasing out details about the chips for even longer, and many of the hyperscalers and cloud builders have been buying them and deploying them for many months. So it may feel like we already did this.

But today is the official launch of the Sapphire Rapids Xeon SPs, which are a significant improvement over the 10 nanometer “Ice Lake” Xeon SPs that debuted in April 2021 for two-socket servers only. There was no version of Ice Lake for four-socket and eight-socket servers. Instead, Intel asked customers to stand pat with the 14 nanometer “Cooper Lake” generation, which was a follow-on to the 14 nanometer “Cascade Lake” Xeon SPs that originally launched in April 2019 and were updated in February 2020 with some nominal price and performance enhancements as Intel’s 10 nanometer manufacturing for the Ice Lake CPUs stalled.

The refined 10 nanometer SuperFIN manufacturing process, which the chip maker now calls Intel 7, has been problematic to a certain extent as well, which is why the 60-core Sapphire Rapids CPUs find themselves in the uncomfortable position of competing against AMD’s 96-core “Genoa” Epyc 9004 processors launched in November 2022 instead of the original target, which was the 64-core “Milan” Epyc 7003s that came out in March 2021.

“You go to war with the army you have,” as Donald Rumsfeld, former Secretary of Defense, once wisely said. And with its much larger manufacturing capability, Intel has played the poor manufacturing hand it dealt itself many years ago through lack of investment and missed technology milestones about as well as anyone could imagine. That Intel still commands more than 70 percent of the share of the compute engines in the X86 server market is a testament to what we have been calling supply wins, which is a different thing from design wins based on chip features. A supply win is based on the fact that the best performing chip is the one you can actually buy and install in your datacenter.

The interesting bit is that just as AMD is getting its substrate supplies in order to allow it to ramp up more production on its Epyc processors, Intel is adding new architectural features to the Xeon SP platform – the AMX matrix math units, lots of other accelerators, and HBM memory as an option for the CPU come immediately to mind – even at the same time it is improving its manufacturing story. The net-net is that this is, despite appearances, still a competitive situation. And there is a chance that within a few years Intel could reach parity in terms of features and manufacturing with AMD and its foundry partner, Taiwan Semiconductor Manufacturing Co.

But that day, even with the huge improvement that the Sapphire Rapids chips offer over the Ice Lake and Cooper Lake chips that they replace, is not today. (With some notable exceptions on the CPU-only HPC and AI front and on servers with more than two processors.)

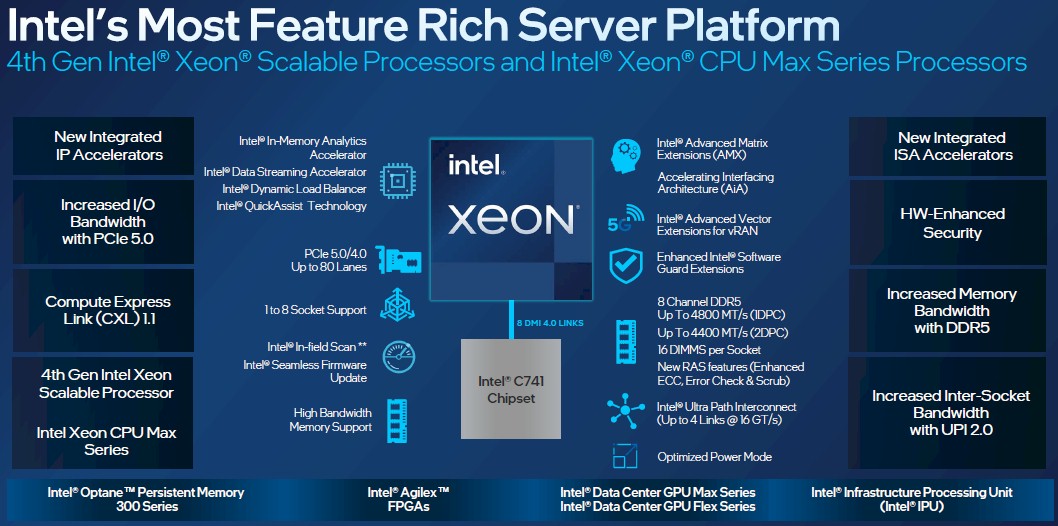

The top brass at Intel say that this is the “most feature rich server platform” in the company’s history, and that is without question true. The Sapphire Rapids chips support DDR5 main memory running at 4.8 GT/sec and 4.4 GT/sec across eight memory channels, the former when there is one memory stick per channel and the latter when there are two memory sticks per channel. The CPUs also support PCI-Express 5.0 peripherals across 80 I/O lanes, and have integrated NUMA electronics with faster UltraPath Interconnect (UPI) links that run at 16 GT/sec, which is 43 percent faster than the 11.2 GT/sec UPI links in the Ice Lake chips. The 64 GB HBM2e stacked memory option on the Max Series CPUs, which we have discussed at length, provides 1.2 TB/sec of memory bandwidth. It allows memory bound applications to run about 2X faster than on standard Sapphire Rapids parts and will absolutely give the AMD Milan-X and Genoa chips a run for the HPC money for memory bandwidth bound applications.
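As a quick sanity check on the interconnect and memory figures above, here is a minimal Python sketch. The UPI and DDR5 rates come from the text. The 8 bytes of data moved per transfer on each memory channel is our assumption, and the result is a theoretical peak, not a measured number.

```python
# Figures quoted in the text.
UPI_SAPPHIRE_RAPIDS_GT_S = 16.0
UPI_ICE_LAKE_GT_S = 11.2
DDR5_CHANNELS = 8
DDR5_RATES_GT_S = {"1 DIMM per channel": 4.8, "2 DIMMs per channel": 4.4}

# Assumption (not from the text): each channel moves 8 bytes of data per transfer.
BYTES_PER_TRANSFER = 8


def percent_uplift(new, old):
    """Percentage increase of `new` over `old`."""
    return (new / old - 1.0) * 100.0


def peak_ddr5_bandwidth_gb_s(rate_gt_s, channels=DDR5_CHANNELS):
    """Theoretical peak bandwidth per socket in GB/sec."""
    return rate_gt_s * BYTES_PER_TRANSFER * channels


upi_uplift = percent_uplift(UPI_SAPPHIRE_RAPIDS_GT_S, UPI_ICE_LAKE_GT_S)
print(f"UPI uplift: {upi_uplift:.0f} percent")
for config, rate in DDR5_RATES_GT_S.items():
    print(f"{config}: {peak_ddr5_bandwidth_gb_s(rate):.1f} GB/sec peak per socket")
```

Under that assumption, the eight DDR5 channels top out at a bit over 300 GB/sec per socket, which puts the 1.2 TB/sec of the HBM2e option in perspective.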

There are lots of new features and architecture changes with the Sapphire Rapids lineup, which we will get into with our usual deep dive following this initial launch coverage, but perhaps the most important one is that this is the first time since the Cascade Lake lineup four years ago that Intel is fielding a complete Xeon SP generation, top to bottom, with myriad SKU variants for all kinds of disparate workloads. This is a real and complete product line, unlike Ice Lake and Cooper Lake, which got all bunched up because of Intel’s manufacturing woes and cut up and pieced together in ways never intended. There are a whopping 52 standard parts in the Sapphire Rapids generation of Xeon SPs, with all kinds of features turned on and off to chase those different workloads with a vengeance.

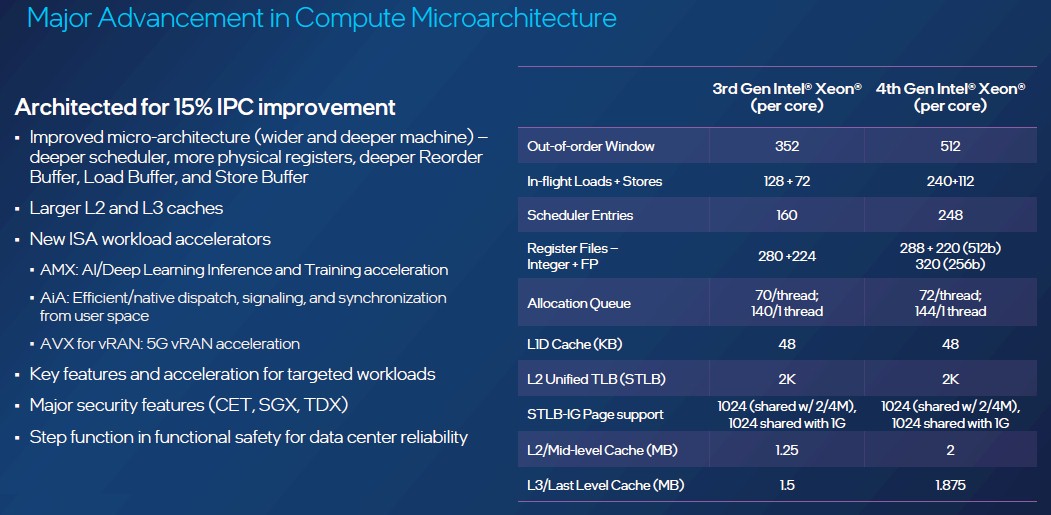

With the Sapphire Rapids chips, Intel is cranking up the processing capacity of the core as well as boosting the number of cores on the CPU package. The “Golden Cove” performance core (or P-Core as Intel calls it) can handle about 15 percent more instructions per clock compared to the “Sunny Cove” core used in the Ice Lake server chip. The top bin Sapphire Rapids chip has 60 cores, which is a 50 percent increase over the 40 cores in the top bin Ice Lake part. The refined 10 nanometer process used for Sapphire Rapids should also allow for maybe 100 MHz to 200 MHz more clock speed, but not the kind of jump you would expect with jumps to 7 nanometers and then to 5 nanometers.

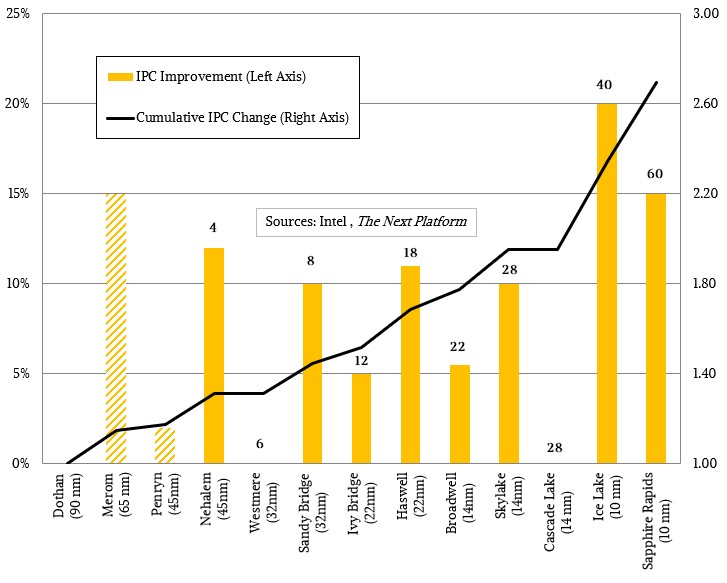

IPC improvement per generation versus cumulative IPC over time. Maximum core count per generation shown above the bars for each Xeon chip.

As has been the case with other CPU makers, the IPC jumps for core designs have been higher than the general trends in recent years, which is a comforting thing. This means that humans can still do engineering and re-engineering with a certain amount of flair. We have been tracking IPC improvements per core generation since Intel launched the “Nehalem” Xeon processors back in March 2009, when Intel was reckoning IPC changes against the “Dothan” core for PCs implemented in 90 nanometer processes. If you accumulate all of the IPC changes over those thirteen core designs, then IPC normalized for the same clock speed has increased by a factor of 2.7 between Dothan and Sapphire Rapids. If you reckon IPC changes against Nehalem, then the Golden Cove core in Sapphire Rapids is 2.06X faster. Between 2009 and 2023, core counts have increased by a factor of 15X, so overall system performance, if the clock speed could have been held constant at the 2.93 GHz of the top bin Nehalem Xeon X5570, would have increased by 30.9X.
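The arithmetic behind that 30.9X figure can be sketched in a few lines of Python. The IPC factors and the 15X core growth are from the text. The four-core count for top bin Nehalem is what a 15X growth to 60 cores implies, and the Dothan-to-Nehalem gain is something we derive here, not a number Intel has published.

```python
# Figures quoted in the text.
IPC_VS_DOTHAN = 2.7     # cumulative IPC, Dothan to Golden Cove
IPC_VS_NEHALEM = 2.06   # cumulative IPC, Nehalem to Golden Cove
SAPPHIRE_RAPIDS_CORES = 60
NEHALEM_CORES = 4       # implied by the 15X core growth in the text

core_growth = SAPPHIRE_RAPIDS_CORES / NEHALEM_CORES

# At a constant clock speed, throughput scales as IPC gain times core growth.
system_gain_at_constant_clock = IPC_VS_NEHALEM * core_growth

# Derived here, not stated in the text: the IPC gain from Dothan to Nehalem.
implied_dothan_to_nehalem = IPC_VS_DOTHAN / IPC_VS_NEHALEM

print(f"Core growth: {core_growth:.0f}X")
print(f"System gain at constant clock: {system_gain_at_constant_clock:.1f}X")
print(f"Implied Dothan-to-Nehalem IPC gain: {implied_dothan_to_nehalem:.2f}X")
```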

Ah, but the clock speed for 60 cores cannot be held anywhere near 3 GHz. Intel, like all other CPU makers, had to give up clock speed to add more cores and therefore increase throughput even as it allowed a server socket to consume 350 watts. The top bin Nehalem Xeon X5570 was rated at 95 watts and the middle bin Xeon E5540 that we use as the touchstone of performance in our Xeon server generation comparisons was an 80 watt part that had four cores running at 2.53 GHz. The top bin Xeon SP-8490H Platinum part in the Sapphire Rapids line has a rating of 23.47 in our relative performance rankings (which is cores times clock speed times IPC relative to the Nehalem core), and the fact that it can only run at a base clock speed of 1.9 GHz is why the relative performance is lower than you might otherwise expect. That Nehalem E5540 cost $1,386 while the Sapphire Rapids Xeon SP-8490H Platinum chip costs $17,000. That is a factor of 12.27X increase in price to drive a 23.47X increase in performance.

When you say it that way, it doesn’t sound so impressive. But that is what happens when Dennard scaling on frequencies stops and Moore’s Law crushes clock speeds down even further in the pursuit of higher throughput.
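To make the relative performance metric concrete, here is a rough Python sketch of cores times clock speed times IPC, normalized to the Nehalem E5540. All inputs are figures quoted above. With the rounded 2.06X IPC factor it lands a little below the 23.47 rating in our rankings, and we assume the gap comes from rounding in that IPC figure.

```python
def raw_performance(cores, base_ghz, ipc_vs_nehalem):
    """Cores times clock speed times IPC relative to the Nehalem core."""
    return cores * base_ghz * ipc_vs_nehalem


# Baseline: Xeon E5540 (four Nehalem cores at 2.53 GHz, IPC of 1.0 by definition).
baseline = raw_performance(cores=4, base_ghz=2.53, ipc_vs_nehalem=1.0)

# Top bin Xeon SP-8490H Platinum: 60 cores at a 1.9 GHz base clock.
top_bin = raw_performance(cores=60, base_ghz=1.9, ipc_vs_nehalem=2.06)

approx_relative_performance = top_bin / baseline

# Prices and the published rating, as quoted in the text.
price_ratio = 17_000 / 1_386
published_rating = 23.47
performance_per_dollar_gain = published_rating / price_ratio

print(f"Approximate relative performance: {approx_relative_performance:.2f}")
print(f"Price ratio: {price_ratio:.2f}X")
print(f"Performance per dollar gain: {performance_per_dollar_gain:.2f}X")
```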

(We will get into more price/performance analysis in a moment in this story and do a more thorough generational analysis in a separate story.)

As has been the case with every prior generation of Xeon chip, everything inside the core gets wider and more capacious and deeper to get more work done per clock. Here is how the Sunny Cove and Golden Cove cores stack up against each other in the third and fourth generation Xeon SP processors:

It is significant that the L2 caches on each core are 60 percent larger at 2 MB and that the shared L3 cache on the Sapphire Rapids package is 1.875 MB per core (topping out at 112.5 MB across 60 cores), an increase of 25 percent per core. (Again, we will be getting into the Golden Cove core and Sapphire Rapids processor architecture in more detail.)
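The cache figures above hang together, as this small Python sketch shows. The Sapphire Rapids sizes and the percentage increases are from the text. The prior-generation sizes are back-calculated from those percentages and are not stated in the text.

```python
# Sapphire Rapids figures quoted in the text.
L2_PER_CORE_MB = 2.0
L3_PER_CORE_MB = 1.875
MAX_CORES = 60
L2_INCREASE = 0.60   # 60 percent larger L2 per core
L3_INCREASE = 0.25   # 25 percent more L3 per core

# Total shared L3 on the top bin package.
total_l3_mb = L3_PER_CORE_MB * MAX_CORES

# Back-calculated prior-generation sizes (derived, not stated in the text).
implied_prev_l2_mb = L2_PER_CORE_MB / (1.0 + L2_INCREASE)
implied_prev_l3_mb = L3_PER_CORE_MB / (1.0 + L3_INCREASE)

print(f"Total L3 across {MAX_CORES} cores: {total_l3_mb} MB")
print(f"Implied prior L2 per core: {implied_prev_l2_mb:.2f} MB")
print(f"Implied prior L3 per core: {implied_prev_l3_mb:.2f} MB")
```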

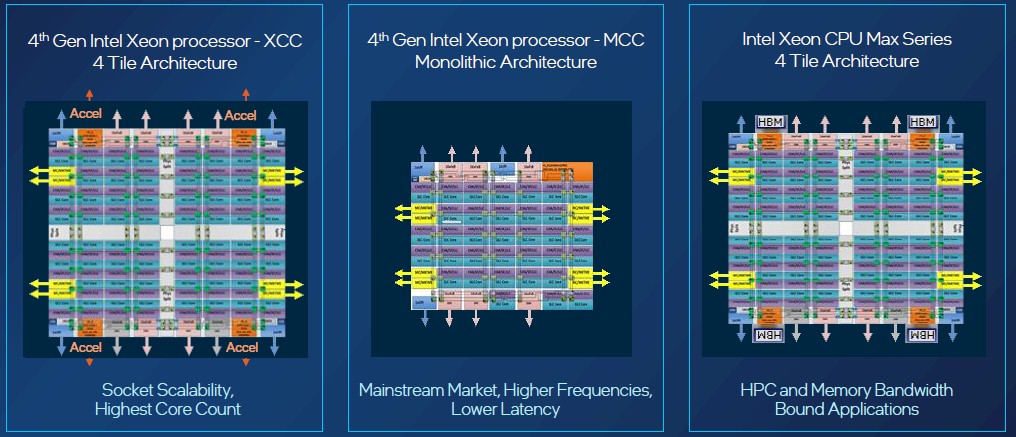

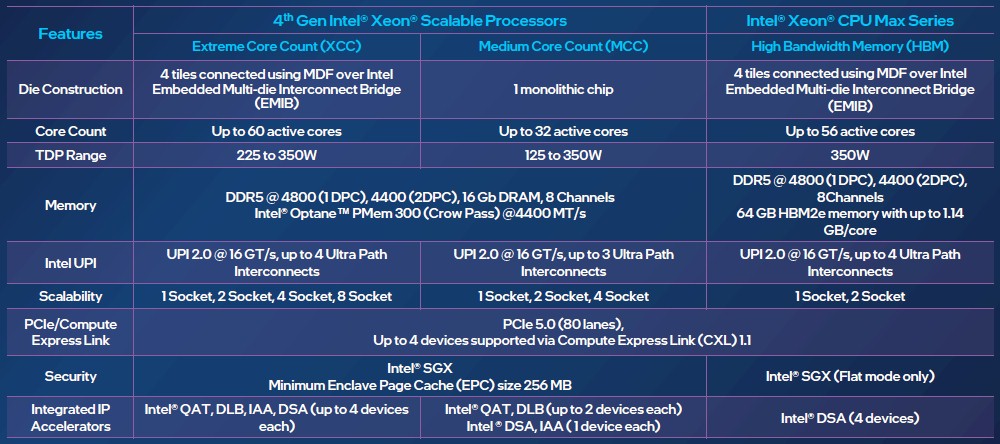

There are actually three different versions of the Sapphire Rapids package, as you can see below:

There is a monolithic die called the MCC, short for Medium Core Count, which has 32 cores maximum. The XCC, or Extreme Core Count, variant has four chiplets interconnected with EMIB links. Each chiplet has 16 cores on it, although Intel is only exposing 15 of these for yield reasons. And truth be told, it looked like the plan was to only expose 56 of them before sense prevailed. Don’t worry – very few people will see the point in buying the 60 core part, given its very high price.

It is a bit of a surprise that there are not 56-core and 52-core versions in the H-Series variants of Sapphire Rapids aimed at databases and heavily virtualized servers. It jumps down to a 48-core version, the Xeon SP-8468H Platinum, which cycles at 2.1 GHz. The top bin Xeon SP-8490H Platinum has a dozen more cores running at a slower 1.9 GHz and delivers 13.1 percent more raw performance, but it costs 22 percent more.
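The comparison between the two H-Series parts can be sketched as follows in Python. Raw performance here is simply cores times base clock speed, which is valid within a generation because the IPC is the same. The 22 percent price premium is taken from the text as given, and the performance per dollar ratio is derived from it.

```python
def raw_throughput(cores, base_ghz):
    """Cores times base clock speed, a same-generation proxy for raw performance."""
    return cores * base_ghz


xeon_8468h = raw_throughput(cores=48, base_ghz=2.1)
xeon_8490h = raw_throughput(cores=60, base_ghz=1.9)

perf_gain_pct = (xeon_8490h / xeon_8468h - 1.0) * 100.0

# Price premium of the 60-core part over the 48-core part, as quoted in the text.
PRICE_PREMIUM = 0.22

# Derived: raw performance per dollar of the 8490H relative to the 8468H.
perf_per_dollar_ratio = (xeon_8490h / xeon_8468h) / (1.0 + PRICE_PREMIUM)

print(f"Raw performance gain: {perf_gain_pct:.1f} percent")
print(f"Relative performance per dollar: {perf_per_dollar_ratio:.3f}")
```

A ratio below 1.0 means the extra dozen cores deliver less raw performance per dollar than the 48-core part, which is the point about incremental compute getting more expensive as core counts rise.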

Here is how the three different Sapphire Rapids packages compare in terms of core counts, watt ranges, connectivity, and NUMA capability:

You will note that there is no longer an LCC, or Low Core Count, variant in the Sapphire Rapids lineup, even though there are chips with fewer cores. Intel wants to minimize the number of dies it needs to get through qualification. And if you need lower core counts, there’s always Cascade Lake or Ice Lake to turn to.

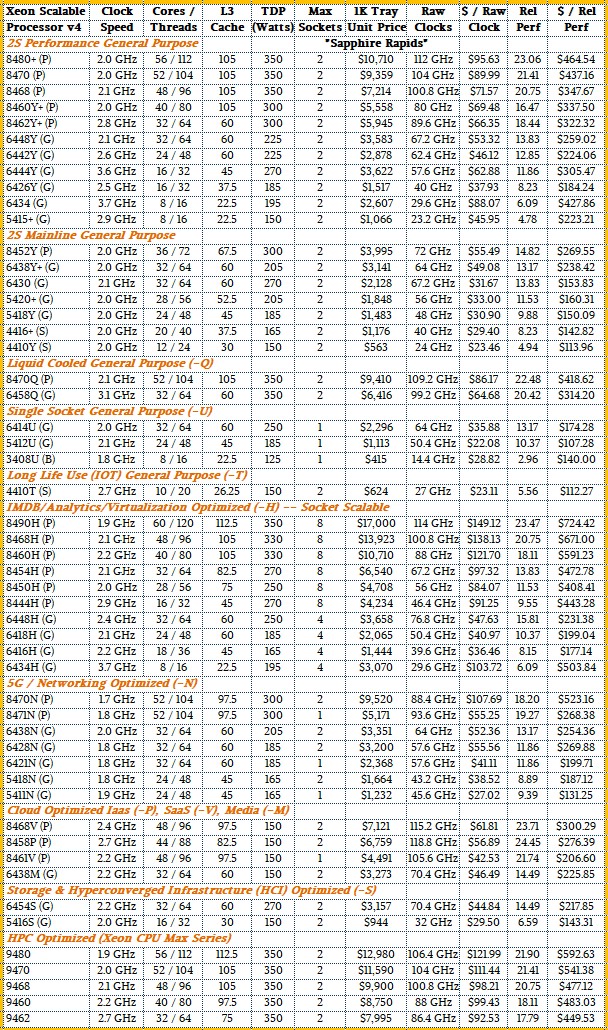

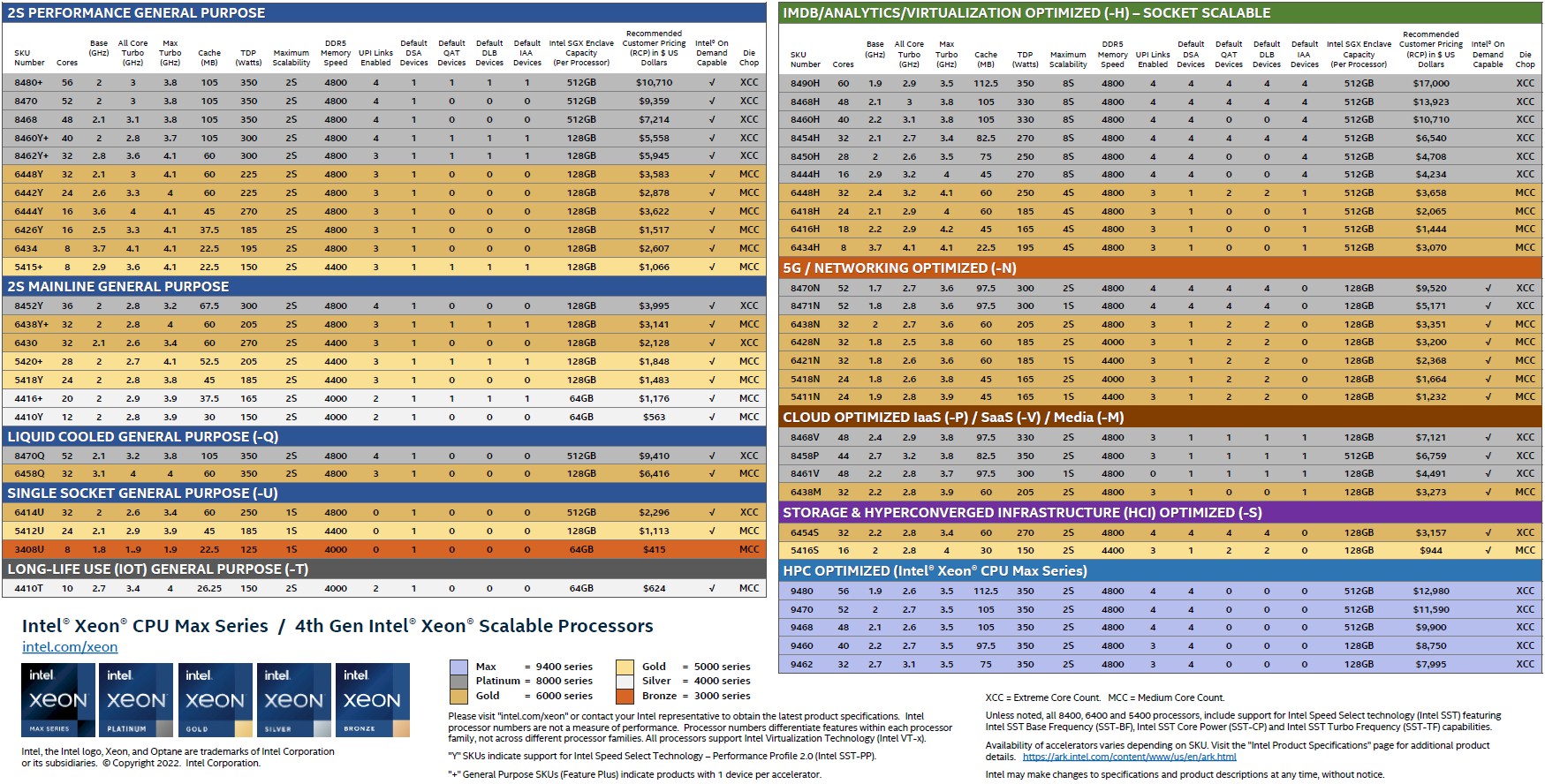

To be helpful, Intel put together a marvelous chart that shows all 52 SKUs in the Sapphire Rapids lineup:

Obviously, click to enlarge this puppy.

This will come in very handy as you are trying to figure out what CPUs you need to get into your shop and which CPUs have what features. If a picture is worth a thousand words, this particular one is worth more like 10,000 – it has that much detail crammed into it. All of which is necessary because Intel does not turn all of its features on for all of its SKUs. Intel is putting a value on all of its features, and AMD has been putting a value on eating market share any way it can. When Intel is at parity, and any gains come harder for AMD – or not at all – we shall see how AMD proceeds with regard to features and pricing. We strongly suspect an ever-widening SKU stack and pricing to match the features.

What we always want to know when a new server CPU generation comes out is how these processors stack up against each other, and so without further ado, here is our pricing and performance table for the Sapphire Rapids lineup:

There are so many ways to dice and slice the analysis on the Sapphire Rapids line that the mind boggles a bit. But generally, there is a big premium on chips that can scale to eight sockets, and a pretty big one for those that can scale to four sockets as well. Also, generally speaking, as the core counts rise within a particular family, the cost of the incremental computing added also rises.

The lesson is: Scale, no matter how you get it, always comes at a price – particularly in a post-Moore’s Law semiconductor industry. NUMA scalability has always been expensive, and now it is expensive within the socket as well as across them.

Up next, we will do the architectural deep dive on the Sapphire Rapids Xeon SPs, do a price/performance analysis across the Xeon generations, and take a look at the competitive landscape Intel is selling these chips into.