Abstract: Based on the paper "Stylized Neural Painting" (arXiv:2011.08114), this notebook provides a minimal, reproducible example of the basic image-to-painting transformation.

This article is shared from the Huawei Cloud Community post "Image Stylized Painting with ModelArts" by HWCloudAI.

ModelArts project page: https://developer.huaweicloud.com/develop/aigallery/notebook/detail?id=b4e4c533-e0e7-4167-94d0-4d38b9bcfd63

```python
import os
import argparse

import matplotlib.pyplot as plt
import moxing as mox
import torch
import torch.optim as optim

# Copy the project archive from OBS and unpack it
mox.file.copy('obs://obs-aigallery-zc/clf/code/stylized-neural-painting.zip', 'stylized-neural-painting.zip')
os.system('unzip stylized-neural-painting.zip')
os.chdir('stylized-neural-painting')  # same effect as the notebook magic `%cd stylized-neural-painting`

if torch.cuda.is_available():
    torch.cuda.current_device()  # initialize the CUDA context early

from painter import *  # Painter, NeuralStyleTransfer, utils, ...

# Select the device to run on
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Configuration
parser = argparse.ArgumentParser(description='STYLIZED NEURAL PAINTING')
args = parser.parse_args(args=[])
args.img_path = './test_images/sunflowers.jpg'  # input image path
args.renderer = 'oilpaintbrush'  # renderer: [watercolor, markerpen, oilpaintbrush, rectangle]
args.canvas_color = 'black'  # canvas background color: [black, white]
args.canvas_size = 512  # rendered canvas size, in pixels
args.max_m_strokes = 500  # maximum number of strokes
args.m_grid = 5  # split the image into m_grid x m_grid blocks
args.beta_L1 = 1.0  # weight of the L1 loss
args.with_ot_loss = False  # True adds the optimal transportation loss for better convergence, but slows generation
args.beta_ot = 0.1  # weight of the optimal transportation loss
args.net_G = 'zou-fusion-net'  # renderer architecture
args.renderer_checkpoint_dir = './checkpoints_G_oilpaintbrush'  # path to the pretrained renderer
args.lr = 0.005  # learning rate for the stroke search
args.output_dir = './output'  # output directory
```
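How the stroke budget is spread over the grid: the progress message printed while drawing reports `(anchor_id + 1) * m_grid * m_grid` strokes out of `max_m_strokes`, which suggests the budget is divided evenly across the m_grid × m_grid blocks. The sketch below assumes integer division; the helper names are hypothetical and not part of the `painter` module. With `max_m_strokes = 500` and `m_grid = 5`, each block gets 20 strokes.

```python
def strokes_per_block(max_m_strokes, m_grid):
    # Assumption: the stroke budget is split evenly over the m_grid x m_grid blocks
    return max_m_strokes // (m_grid * m_grid)


def strokes_drawn(max_m_strokes, m_grid):
    # Strokes actually placed; any remainder of the budget is dropped
    return strokes_per_block(max_m_strokes, m_grid) * m_grid * m_grid
```

If `max_m_strokes` is not a multiple of `m_grid²` (e.g. 500 with `m_grid = 4`), this assumption means a few strokes of the budget go unused (496 of 500).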

Download the pretrained neural renderer.

Define a helper function to check the drawing status.

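```python
def _drawing_step_states(pt):
    # Print the loss, the PSNR against the target and the number of strokes drawn so far
    acc = pt._compute_acc().item()
    print('iteration step %d, G_loss: %.5f, step_psnr: %.5f, strokes: %d / %d'
          % (pt.step_id, pt.G_loss.item(), acc,
             (pt.anchor_id + 1) * pt.m_grid * pt.m_grid, pt.max_m_strokes))
    # Stitched preview of the current canvas (not displayed in this notebook)
    vis2 = utils.patches2img(pt.G_final_pred_canvas, pt.m_grid).clip(min=0, max=1)
```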
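`utils.patches2img` stitches the per-block canvases back into one image. The pure-Python sketch below illustrates the idea under two assumptions: each patch is a single-channel 2-D list, and patches are laid out row-major (patch `k` goes to grid row `k // m_grid`, column `k % m_grid`). The repo's own function works on PyTorch tensors, and its exact ordering is not confirmed here; `patches_to_image` and `clip01` are hypothetical names.

```python
def patches_to_image(patches, m_grid):
    """Stitch m_grid*m_grid equally sized 2-D patches into one image, row-major."""
    assert len(patches) == m_grid * m_grid
    h, w = len(patches[0]), len(patches[0][0])
    image = [[0.0] * (m_grid * w) for _ in range(m_grid * h)]
    for k, patch in enumerate(patches):
        row, col = divmod(k, m_grid)  # grid cell of patch k
        for y in range(h):
            for x in range(w):
                image[row * h + y][col * w + x] = patch[y][x]
    return image


def clip01(image):
    """Clamp every pixel into [0, 1], like .clip(min=0, max=1)."""
    return [[min(max(v, 0.0), 1.0) for v in row] for row in image]
```

For example, four 1×1 patches `1, 2, 3, 4` on a 2×2 grid become `[[1, 2], [3, 4]]`.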

Define the optimization loop.

```python
def optimize_x(pt):
    pt._load_checkpoint()
    pt.net_G.eval()

    # Initialize the stroke parameters and make them trainable; the renderer stays frozen
    pt.initialize_params()
    pt.x_ctt.requires_grad = True
    pt.x_color.requires_grad = True
    pt.x_alpha.requires_grad = True
    utils.set_requires_grad(pt.net_G, False)

    pt.optimizer_x = optim.RMSprop([pt.x_ctt, pt.x_color, pt.x_alpha], lr=pt.lr)

    print('begin to draw...')
    pt.step_id = 0
    # Each anchor adds one new stroke per block; the last anchor gets extra iterations
    for pt.anchor_id in range(0, pt.m_strokes_per_block):
        pt.stroke_sampler(pt.anchor_id)
        iters_per_stroke = 20
        if pt.anchor_id == pt.m_strokes_per_block - 1:
            iters_per_stroke = 40
        for i in range(iters_per_stroke):
            pt.optimizer_x.zero_grad()

            # Keep the stroke parameters inside their valid ranges
            pt.x_ctt.data = torch.clamp(pt.x_ctt.data, 0.1, 1 - 0.1)
            pt.x_color.data = torch.clamp(pt.x_color.data, 0, 1)
            pt.x_alpha.data = torch.clamp(pt.x_alpha.data, 0, 1)

            # Start every iteration from a blank canvas (one 128x128 patch per block)
            if args.canvas_color == 'white':
                pt.G_pred_canvas = torch.ones([args.m_grid ** 2, 3, 128, 128]).to(device)
            else:
                pt.G_pred_canvas = torch.zeros(args.m_grid ** 2, 3, 128, 128).to(device)

            pt._forward_pass()
            _drawing_step_states(pt)
            pt._backward_x()
            pt.optimizer_x.step()

            pt.x_ctt.data = torch.clamp(pt.x_ctt.data, 0.1, 1 - 0.1)
            pt.x_color.data = torch.clamp(pt.x_color.data, 0, 1)
            pt.x_alpha.data = torch.clamp(pt.x_alpha.data, 0, 1)

            pt.step_id += 1

    # Save the stroke parameters and render the final painting
    v = pt.x.detach().cpu().numpy()
    pt._save_stroke_params(v)
    v_n = pt._normalize_strokes(pt.x)
    pt.final_rendered_images = pt._render_on_grids(v_n)
    pt._save_rendered_images()
```
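The loop above follows a projected-gradient pattern: RMSprop updates the stroke parameters, and `torch.clamp` pulls them back into their valid box (`[0.1, 0.9]` for `x_ctt`, `[0, 1]` for color and alpha) before the forward pass and after each step. Below is a one-dimensional pure-Python sketch of the same pattern, with the RMSprop update written out using PyTorch's default `alpha=0.99` and `eps=1e-8`. The toy objective and the function names are illustrative only.

```python
import math


def clamp(v, lo, hi):
    return min(max(v, lo), hi)


def projected_rmsprop(grad_fn, x0, lo, hi, lr=0.01, alpha=0.99, eps=1e-8, steps=200):
    """Minimize a 1-D function with RMSprop, keeping x inside [lo, hi]."""
    x = clamp(x0, lo, hi)
    sq_avg = 0.0  # running average of squared gradients
    for _ in range(steps):
        x = clamp(x, lo, hi)  # project before computing the gradient
        g = grad_fn(x)
        sq_avg = alpha * sq_avg + (1 - alpha) * g * g
        x -= lr * g / (math.sqrt(sq_avg) + eps)  # RMSprop step
        x = clamp(x, lo, hi)  # project again after the update
    return x


# Minimize (x - 1.5)^2 on [0.1, 0.9]: the optimum lies outside the box
print(projected_rmsprop(lambda x: 2 * (x - 1.5), x0=0.5, lo=0.1, hi=0.9))
```

When the unconstrained optimum (1.5 here) lies outside the box, the iterate ends on the nearest bound, 0.9; when it lies inside, the clamp has no effect and RMSprop converges as usual.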

Process the image. This can take a while; a GPU with 32 GB or more of memory is recommended.

```python
pt = Painter(args=args)
optimize_x(pt)
```
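To get a sense of why this run takes a while: in the drawing loop, each of the `m_strokes_per_block` anchors gets 20 RMSprop iterations and the last one gets 40, and every iteration runs the neural renderer forward and backward on all m_grid² blocks as one batch. The hypothetical helper below counts those optimizer steps. Assuming 20 strokes per block (500 strokes on a 5 × 5 grid), it gives 19 × 20 + 40 = 420 steps.

```python
def total_optimizer_steps(m_strokes_per_block, iters_per_stroke=20, last_iters=40):
    """Optimizer steps in the drawing loop: every anchor but the last runs
    iters_per_stroke iterations, the last anchor runs last_iters."""
    if m_strokes_per_block <= 0:
        return 0
    return (m_strokes_per_block - 1) * iters_per_stroke + last_iters


print(total_optimizer_steps(20))  # 420
```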

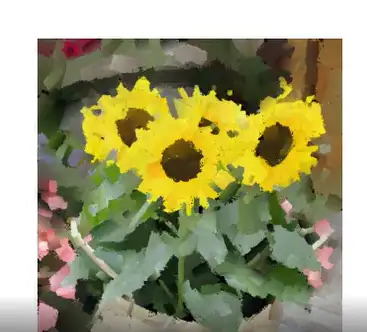

The results are saved in `args.output_dir`. Before downloading that folder, let's first look at the generated painting.

```python
plt.subplot(1, 2, 1)
plt.imshow(pt.img_)
plt.title('input')
plt.subplot(1, 2, 2)
plt.imshow(pt.final_rendered_images[-1])
plt.title('generated')
plt.show()
```

Download the `args.output_dir` folder to view the high-resolution results locally.
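One way to download the whole folder in one go is to zip it first with the standard library's `shutil.make_archive` and then download the single archive. The helper name and archive location below are only examples. Keep the archive outside `args.output_dir` so that it is not picked up while it is being written.

```python
import shutil


def archive_output_dir(output_dir, archive_base):
    """Zip the contents of output_dir into <archive_base>.zip and return the archive path."""
    # root_dir makes the paths stored in the archive relative to output_dir
    return shutil.make_archive(archive_base, "zip", root_dir=output_dir)
```

In the notebook this could be called as `archive_output_dir(args.output_dir, './painting_results')`, which writes `painting_results.zip` next to the `output` folder.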

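```python
# Show the rendering progress as an interactive animation
import matplotlib.animation as animation
from IPython.display import HTML

fig = plt.figure(figsize=(4, 4))
plt.axis('off')
# One frame for every 10th rendered image
ims = [[plt.imshow(img, animated=True)] for img in pt.final_rendered_images[::10]]
ani = animation.ArtistAnimation(fig, ims, interval=50)  # 50 ms between frames
# HTML(ani.to_jshtml())  # alternative that does not need ffmpeg
HTML(ani.to_html5_video())  # requires ffmpeg
```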

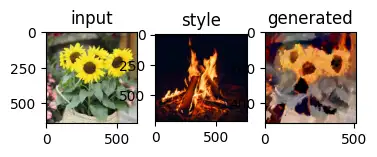

Next, let's try style transfer. Since we frame stroke prediction as a parameter-search problem, our method fits naturally into the neural style transfer framework.


```python
# Configuration
args.content_img_path = './test_images/sunflowers.jpg'  # path to the original input (content) image
args.style_img_path = './style_images/fire.jpg'  # path to the style image
args.vector_file = './output/sunflowers_strokes.npz'  # path to the pre-generated stroke parameter file
args.transfer_mode = 1  # 0: transfer color only, 1: transfer color and texture
args.beta_L1 = 1.0  # weight of the L1 loss
args.beta_sty = 0.5  # weight of the VGG style loss
args.net_G = 'zou-fusion-net'  # renderer architecture
args.renderer_checkpoint_dir = './checkpoints_G_oilpaintbrush'  # path to the pretrained renderer
args.lr = 0.005  # learning rate for the stroke search
args.output_dir = './output'  # output directory
```
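`beta_L1` and `beta_sty` weight the two terms of the style-transfer objective. The sketch below assumes the objective is a weighted sum `beta_L1 * L1 + beta_sty * style`, with the style term computed as a Gatys-style Gram-matrix loss on feature maps. It does not reproduce the VGG layers or the exact normalization used in the repo. Features here are plain lists, with one flat list of H×W activations per channel, and all function names are hypothetical.

```python
def gram_matrix(features):
    """Gram matrix of C channels (each a flat list of H*W activations), normalized by C*H*W."""
    c, n = len(features), len(features[0])
    return [[sum(a * b for a, b in zip(features[i], features[j])) / (c * n)
             for j in range(c)] for i in range(c)]


def style_loss(gen_features, style_features):
    """Mean squared difference between the Gram matrices of two feature sets."""
    g_gen, g_sty = gram_matrix(gen_features), gram_matrix(style_features)
    c = len(g_gen)
    return sum((g_gen[i][j] - g_sty[i][j]) ** 2 for i in range(c) for j in range(c)) / (c * c)


def l1_loss(pred, target):
    """Mean absolute pixel difference."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def total_loss(pred, content, gen_features, style_features, beta_L1=1.0, beta_sty=0.5):
    # Assumed objective: pixel L1 term plus weighted Gram-matrix style term
    return beta_L1 * l1_loss(pred, content) + beta_sty * style_loss(gen_features, style_features)
```

Because the Gram matrix only records channel-to-channel correlations and discards spatial layout, the style term can push stroke colors and textures toward the style image while the L1 term keeps the composition close to the content.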

Again, let's define a helper function to check the style transfer status.

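```python
def _style_transfer_step_states(pt):
    # Print the loss and the PSNR against the target for the current step
    acc = pt._compute_acc().item()
    print('running style transfer... iteration step %d, G_loss: %.5f, step_psnr: %.5f'
          % (pt.step_id, pt.G_loss.item(), acc))
    # Stitched preview of the current canvas (not displayed in this notebook)
    vis2 = utils.patches2img(pt.G_final_pred_canvas, pt.m_grid).clip(min=0, max=1)
```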
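The `step_psnr` value in both helpers comes from `pt._compute_acc()`. As the label suggests, this is assumed to be the peak signal-to-noise ratio between the rendered canvas and the target, with pixel values in [0, 1]: PSNR = 10·log10(MAX² / MSE). Below is a minimal sketch on flat lists of pixel values; `psnr` is a hypothetical name, not the repo's function.

```python
import math


def psnr(pred, target, pixel_max=1.0):
    """PSNR in dB between two equally long lists of pixel values."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(pixel_max ** 2 / mse)
```

For instance, a uniform error of 0.1 gives MSE = 0.01 and a PSNR of 20 dB. Higher values mean the canvas is closer to the target.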

Define the optimization loop.

```python
def optimize_x(pt):
    pt._load_checkpoint()
    pt.net_G.eval()

    if args.transfer_mode == 0:  # transfer color only
        pt.x_ctt.requires_grad = False
        pt.x_color.requires_grad = True
        pt.x_alpha.requires_grad = False
    else:  # transfer both color and texture
        pt.x_ctt.requires_grad = True
        pt.x_color.requires_grad = True
        pt.x_alpha.requires_grad = True

    pt.optimizer_x_sty = optim.RMSprop([pt.x_ctt, pt.x_color, pt.x_alpha], lr=pt.lr)

    iters_per_stroke = 100
    for i in range(iters_per_stroke):
        pt.optimizer_x_sty.zero_grad()

        # Keep the stroke parameters inside their valid ranges
        pt.x_ctt.data = torch.clamp(pt.x_ctt.data, 0.1, 1 - 0.1)
        pt.x_color.data = torch.clamp(pt.x_color.data, 0, 1)
        pt.x_alpha.data = torch.clamp(pt.x_alpha.data, 0, 1)

        # Start every iteration from a blank canvas (one 128x128 patch per block)
        if args.canvas_color == 'white':
            pt.G_pred_canvas = torch.ones([pt.m_grid * pt.m_grid, 3, 128, 128]).to(device)
        else:
            pt.G_pred_canvas = torch.zeros(pt.m_grid * pt.m_grid, 3, 128, 128).to(device)

        pt._forward_pass()
        _style_transfer_step_states(pt)
        pt._backward_x_sty()
        pt.optimizer_x_sty.step()

        pt.x_ctt.data = torch.clamp(pt.x_ctt.data, 0.1, 1 - 0.1)
        pt.x_color.data = torch.clamp(pt.x_color.data, 0, 1)
        pt.x_alpha.data = torch.clamp(pt.x_alpha.data, 0, 1)

        pt.step_id += 1

    print('saving style transfer result...')
    v_n = pt._normalize_strokes(pt.x)
    pt.final_rendered_images = pt._render_on_grids(v_n)

    # Save a copy of the style image and the stylized painting, named after both inputs
    content_name = os.path.splitext(os.path.basename(args.content_img_path))[0]
    style_name = os.path.splitext(os.path.basename(args.style_img_path))[0]
    file_dir = os.path.join(args.output_dir, content_name)
    plt.imsave(file_dir + '_style_img_' + style_name + '.png', pt.style_img_)
    plt.imsave(file_dir + '_style_transfer_' + style_name + '.png', pt.final_rendered_images[-1])
```

Run style transfer.

```python
pt = NeuralStyleTransfer(args=args)
optimize_x(pt)
```

The high-resolution output files are saved in `args.output_dir`.
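The style-transfer loop names its two output files by combining the content-image stem, `_style_img_` or `_style_transfer_`, and the style-image stem. A small hypothetical helper that rebuilds those paths from the configuration makes it easier to find the files:

```python
import os


def style_transfer_outputs(output_dir, content_img_path, style_img_path):
    """Return (style image copy, stylized painting) paths following the naming in optimize_x."""
    content = os.path.splitext(os.path.basename(content_img_path))[0]
    style = os.path.splitext(os.path.basename(style_img_path))[0]
    prefix = os.path.join(output_dir, content)
    return (prefix + "_style_img_" + style + ".png",
            prefix + "_style_transfer_" + style + ".png")
```

With the configuration above (on a POSIX system), this gives `./output/sunflowers_style_img_fire.png` and `./output/sunflowers_style_transfer_fire.png`.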

Let's preview the results:

```python
plt.subplot(1, 3, 1)
plt.imshow(pt.img_)
plt.title('input')
plt.subplot(1, 3, 2)
plt.imshow(pt.style_img_)
plt.title('style')
plt.subplot(1, 3, 3)
plt.imshow(pt.final_rendered_images[-1])
plt.title('generated')
plt.show()
```