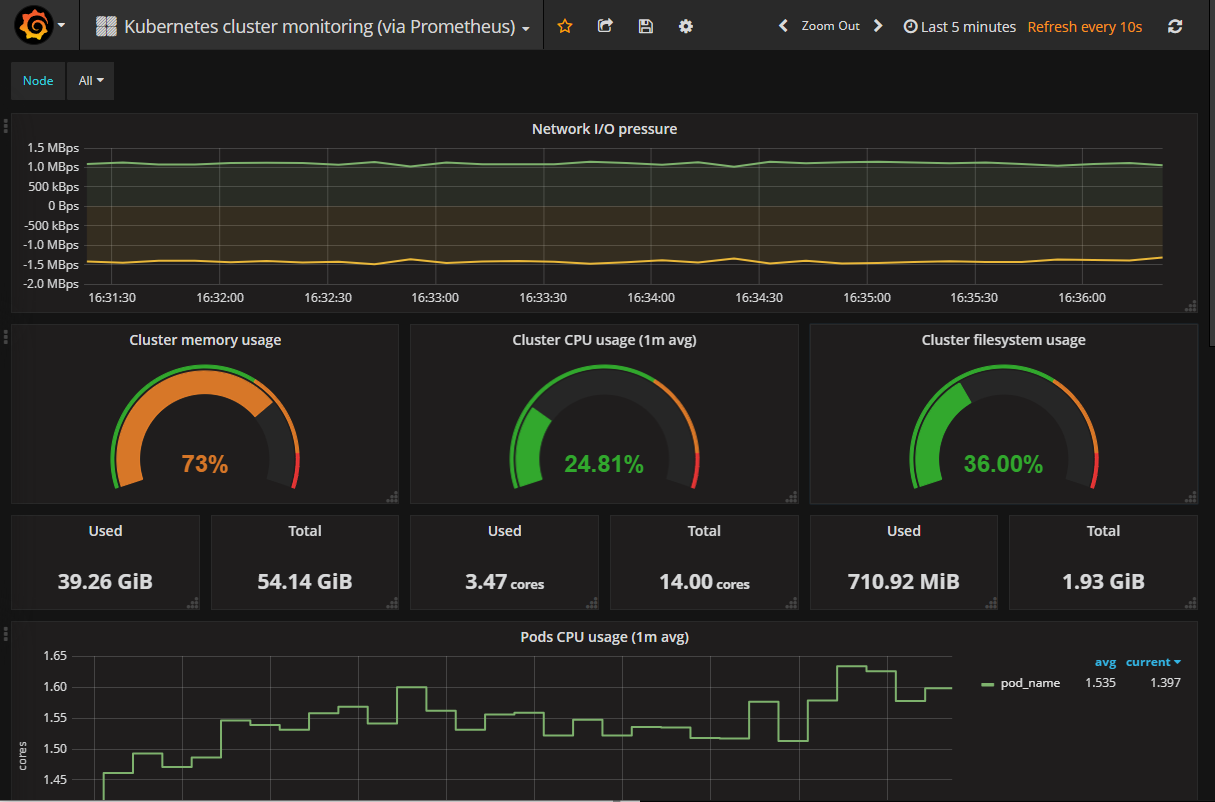

Monitoring metrics

Cluster monitoring

Pod monitoring

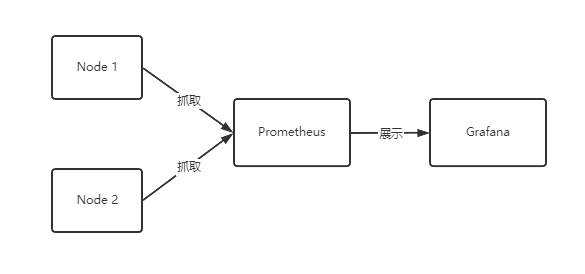

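Open source

Combines monitoring, alerting, and a database

Periodically scrapes the state of monitored components over HTTP

No complex integration is required; a component only needs to expose an HTTP endpoint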
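The pull model described above can be illustrated with a minimal sketch: a component exposes its state as plain text over HTTP, and a scraper fetches it on a schedule. The metric name `demo_requests_total`, the handler class, and the helper functions are hypothetical and exist only for this illustration; a real component would normally use a Prometheus client library instead.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical metric, written in the plain-text exposition style.
METRICS_TEXT = (
    "# HELP demo_requests_total Requests handled by the demo component.\n"
    "# TYPE demo_requests_total counter\n"
    "demo_requests_total 42\n"
)


class MetricsHandler(BaseHTTPRequestHandler):
    """Serves the current metrics on GET /metrics."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = METRICS_TEXT.encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # keep the demo quiet


def scrape(url):
    """Fetch one metrics page, which is what a single scrape amounts to."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=5) as resp:
        return resp.read().decode("utf-8")


def demo_scrape():
    """Start the demo endpoint on a free local port and scrape it once."""
    server = HTTPServer(("127.0.0.1", 0), MetricsHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        port = server.server_address[1]
        return scrape(f"http://127.0.0.1:{port}/metrics")
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(demo_scrape())
```

The component needs no knowledge of the monitoring server; it only has to answer HTTP GET requests on its metrics path.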

An open-source data analysis and visualization tool

Supports multiple data sources

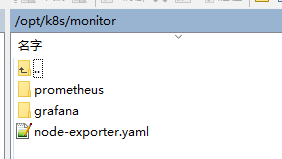

Upload the YAML files to the Linux server:

    node-exporter.yaml
    prometheus/
        configmap.yaml
        prometheus.deploy.yml
        prometheus.svc.yml
        rbac-setup.yaml
    grafana/
        grafana-deploy.yaml
        grafana-ing.yaml
        grafana-svc.yaml

node-exporter.yaml:

    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: kube-system
      labels:
        k8s-app: node-exporter
    spec:
      selector:
        matchLabels:
          k8s-app: node-exporter
      template:
        metadata:
          labels:
            k8s-app: node-exporter
        spec:
          containers:
          - image: prom/node-exporter
            name: node-exporter
            ports:
            - containerPort: 9100
              protocol: TCP
              name: http
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: node-exporter
      name: node-exporter
      namespace: kube-system
    spec:
      ports:
      - name: http
        port: 9100
        nodePort: 31672
        protocol: TCP
      type: NodePort
      selector:
        k8s-app: node-exporter
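With the Service above, each node's exporter should be reachable at `http://<node-ip>:31672/metrics`, and the response is plain text with one sample per line. The following is a minimal sketch of reading that format. The sample text is illustrative rather than captured from this cluster, and the parser is deliberately simplified: it assumes no trailing timestamps and keeps the label set as part of the key.

```python
# Illustrative sample in the text exposition format (not real cluster output).
SAMPLE = """\
# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.21
node_cpu_seconds_total{cpu="0",mode="idle"} 1234.5
"""


def parse_metrics(text):
    """Return {series: value} for every sample line, skipping comments.

    Simplification: the value is taken to be the last space-separated
    field, so lines carrying an optional timestamp are not supported.
    """
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


if __name__ == "__main__":
    for series, value in parse_metrics(SAMPLE).items():
        print(series, value)
```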

prometheus/configmap.yaml:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: kube-system
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          evaluation_interval: 15s
        scrape_configs:
        - job_name: 'kubernetes-apiservers'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
        - job_name: 'kubernetes-nodes'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics
        - job_name: 'kubernetes-cadvisor'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
        - job_name: 'kubernetes-service-endpoints'
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
            action: replace
            target_label: __scheme__
            regex: (https?)
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
            action: replace
            target_label: __address__
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            action: replace
            target_label: kubernetes_name
        - job_name: 'kubernetes-services'
          kubernetes_sd_configs:
          - role: service
          metrics_path: /probe
          params:
            module: [http_2xx]
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__address__]
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.example.com:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            target_label: kubernetes_name
        - job_name: 'kubernetes-ingresses'
          kubernetes_sd_configs:
          - role: ingress
          relabel_configs:
          - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
            regex: (.+);(.+);(.+)
            replacement: ${1}://${2}${3}
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.example.com:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_ingress_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_ingress_name]
            target_label: kubernetes_name
        - job_name: 'kubernetes-pods'
          kubernetes_sd_configs:
          - role: pod
          relabel_configs:
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
            action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_pod_name]
            action: replace
            target_label: kubernetes_pod_name
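The `replace` relabel rules in this configuration are easier to follow with concrete values. The sketch below imitates one such step in Python. It relies on three documented Prometheus behaviours: source label values are joined with `;`, the regex must match the whole joined string, and the target is left unchanged when there is no match. The function name, the pod address, and the node name `k8snode1` are hypothetical.

```python
import re


def relabel_replace(source_values, regex, replacement, separator=";"):
    """Imitate one Prometheus `replace` relabel step.

    Returns the new target value, or None when the regex does not match
    (Prometheus then leaves the target label untouched).
    """
    joined = separator.join(source_values)
    match = re.fullmatch(regex, joined)  # Prometheus anchors the regex
    if match is None:
        return None
    # Translate Prometheus-style $1 / ${1} references to Python's \g<1>.
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    return match.expand(template)


ADDRESS_REGEX = r"([^:]+)(?::\d+)?;(\d+)"

if __name__ == "__main__":
    # __address__ plus the prometheus.io/port annotation value.
    print(relabel_replace(["10.244.1.7:8080", "9102"], ADDRESS_REGEX, "$1:$2"))
    # Node name rewritten into the API server proxy path.
    print(relabel_replace(["k8snode1"], r"(.+)",
                          "/api/v1/nodes/${1}/proxy/metrics"))
```

When the port annotation is absent, the joined string ends in `;` and the regex fails to match, so the discovered address is kept as it is.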

prometheus/prometheus.deploy.yml:

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        name: prometheus-deployment
      name: prometheus
      namespace: kube-system
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus
      template:
        metadata:
          labels:
            app: prometheus
        spec:
          containers:
          - image: prom/prometheus:v2.0.0
            name: prometheus
            command:
            - "/bin/prometheus"
            args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus"
            - "--storage.tsdb.retention=24h"
            ports:
            - containerPort: 9090
              protocol: TCP
            volumeMounts:
            - mountPath: "/prometheus"
              name: data
            - mountPath: "/etc/prometheus"
              name: config-volume
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
              limits:
                cpu: 500m
                memory: 2500Mi
          serviceAccountName: prometheus
          volumes:
          - name: data
            emptyDir: {}
          - name: config-volume
            configMap:
              name: prometheus-config

prometheus/prometheus.svc.yml:

    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        app: prometheus
      name: prometheus
      namespace: kube-system
    spec:
      type: NodePort
      ports:
      - port: 9090
        targetPort: 9090
        nodePort: 30303
      selector:
        app: prometheus

prometheus/rbac-setup.yaml:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus
    rules:
    - apiGroups: [""]
      resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
      verbs: ["get", "list", "watch"]
    - apiGroups:
      - extensions
      resources:
      - ingresses
      verbs: ["get", "list", "watch"]
    - nonResourceURLs: ["/metrics"]
      verbs: ["get"]
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: kube-system

grafana/grafana-deploy.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: grafana-core
      namespace: kube-system
      labels:
        app: grafana
        component: core
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: grafana
          component: core
      template:
        metadata:
          labels:
            app: grafana
            component: core
        spec:
          containers:
          - image: grafana/grafana:4.2.0
            name: grafana-core
            imagePullPolicy: IfNotPresent
            # env:
            resources:
              # keep request = limit to keep this container in guaranteed class
              limits:
                cpu: 100m
                memory: 100Mi
              requests:
                cpu: 100m
                memory: 100Mi
            env:
              # The following env variables set up basic auth with the default admin user and admin password.
              - name: GF_AUTH_BASIC_ENABLED
                value: "true"
              - name: GF_AUTH_ANONYMOUS_ENABLED
                value: "false"
              # - name: GF_AUTH_ANONYMOUS_ORG_ROLE
              #   value: Admin
              # does not really work, because of template variables in exported dashboards:
              # - name: GF_DASHBOARDS_JSON_ENABLED
              #   value: "true"
            readinessProbe:
              httpGet:
                path: /login
                port: 3000
              # initialDelaySeconds: 30
              # timeoutSeconds: 1
            volumeMounts:
            - name: grafana-persistent-storage
              mountPath: /var
          volumes:
          - name: grafana-persistent-storage
            emptyDir: {}

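grafana/grafana-ing.yaml:

    # Note: the extensions/v1beta1 Ingress API was removed in Kubernetes 1.22;
    # newer clusters need networking.k8s.io/v1 with its updated backend syntax.
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: grafana
      namespace: kube-system
    spec:
      rules:
      - host: k8s.grafana
        http:
          paths:
          - path: /
            backend:
              serviceName: grafana
              servicePort: 3000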

grafana/grafana-svc.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      name: grafana
      namespace: kube-system
      labels:
        app: grafana
        component: core
    spec:
      type: NodePort
      ports:
      - port: 3000
      selector:
        app: grafana
        component: core

[root@k8smaster monitor]# ls

grafana node-exporter.yaml prometheus

# 安装 Prometheus

[root@k8smaster monitor]# kubectl create -f prometheus/rbac-setup.yaml

clusterrole.rbac.authorization.k8s.io/prometheus created

serviceaccount/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

[root@k8smaster monitor]# kubectl create -f node-exporter.yaml

daemonset.apps/node-exporter created

service/node-exporter created

[root@k8smaster monitor]# kubectl create -f prometheus/configmap.yaml

configmap/prometheus-config created

[root@k8smaster monitor]# kubectl create -f prometheus/prometheus.deploy.yml

deployment.apps/prometheus created

[root@k8smaster monitor]# kubectl create -f prometheus/prometheus.svc.yml

service/prometheus created

# 安装 Grafana

[root@k8smaster monitor]# kubectl create -f grafana/grafana-deploy.yaml

deployment.apps/grafana-core created

[root@k8smaster monitor]# kubectl create -f grafana/grafana-svc.yaml

service/grafana created

[root@k8smaster monitor]# kubectl create -f grafana/grafana-ing.yaml

ingress.extensions/grafana created

# 查看状态

[root@k8smaster monitor]# kubectl get pod,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-7ff77c879f-jzlk2 1/1 Running 3 46d

pod/coredns-7ff77c879f-lkcdc 1/1 Running 1 7d

pod/etcd-k8smaster 1/1 Running 2 46d

pod/grafana-core-768b6bf79c-cql42 1/1 Running 0 2m17s

pod/kube-apiserver-k8smaster 1/1 Running 2 46d

pod/kube-controller-manager-k8smaster 1/1 Running 3 46d

pod/kube-proxy-2245f 1/1 Running 11 46d

pod/kube-proxy-4rlp8 1/1 Running 7 46d

pod/kube-proxy-c8fq4 1/1 Running 0 5d2h

pod/kube-proxy-gtfts 1/1 Running 2 46d

pod/kube-scheduler-k8smaster 1/1 Running 4 46d

pod/metrics-server-655cb9c58b-xcxzw 1/1 Running 17 5d2h

pod/node-exporter-52hwv 1/1 Running 0 34m

pod/node-exporter-mj8tk 1/1 Running 0 34m

pod/node-exporter-n2ctw 1/1 Running 0 34m

pod/prometheus-7486bf7f4b-4f7vt 1/1 Running 0 3m

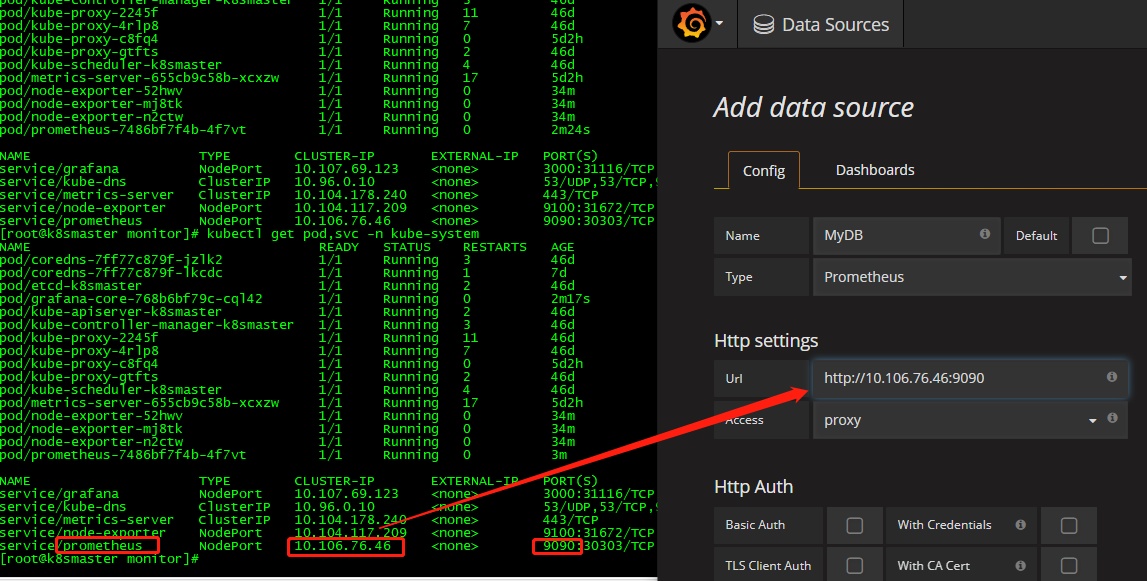

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana NodePort 10.107.69.123 <none> 3000:31116/TCP 2m8s

service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 46d

service/metrics-server ClusterIP 10.104.178.240 <none> 443/TCP 11d

service/node-exporter NodePort 10.104.117.209 <none> 9100:31672/TCP 34m

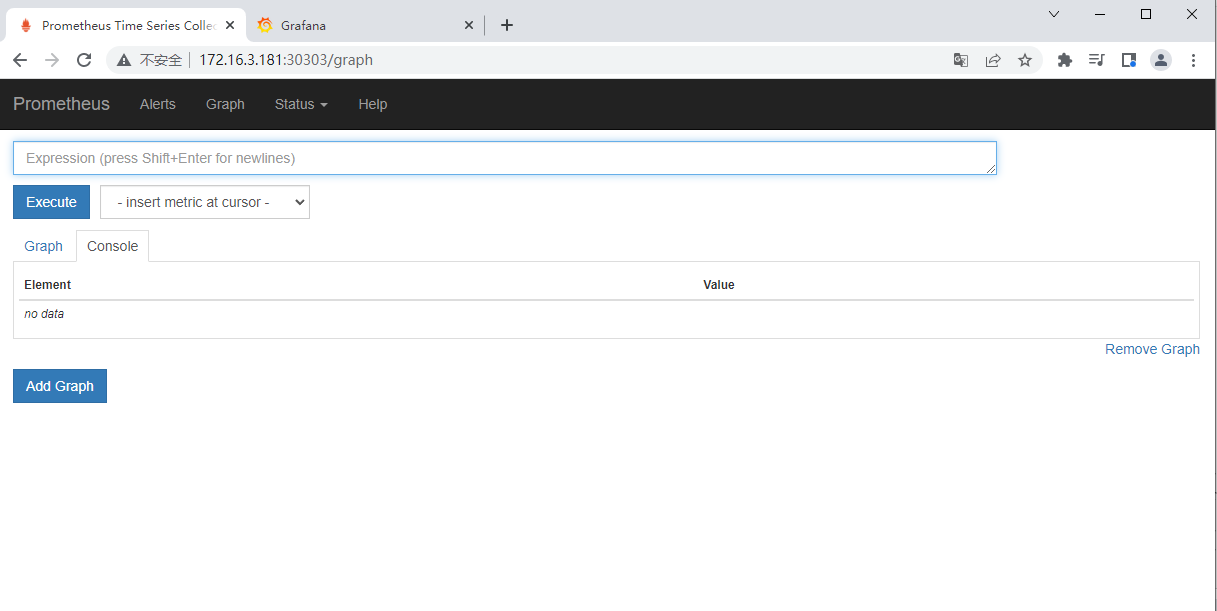

service/prometheus NodePort 10.106.76.46 <none> 9090:30303/TCP 13m

[root@k8smaster monitor]#
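The `PORT(S)` column in the service listing shows each NodePort mapping as `<service-port>:<node-port>/<protocol>`, and the node port is what a browser outside the cluster uses. As a rough sketch, the helpers below pull the node port out of such a cell and build the URL to open. The function names and the node IP `192.0.2.10` are placeholders and are not taken from this cluster.

```python
def node_port(ports_cell, service_port):
    """Return the NodePort mapped to service_port, or None if there is none.

    ports_cell is one PORT(S) cell from `kubectl get svc`,
    for example "9090:30303/TCP" or "53/UDP,53/TCP,9153/TCP".
    """
    for entry in ports_cell.split(","):
        mapping, _, _protocol = entry.partition("/")
        port, sep, mapped = mapping.partition(":")
        if sep and int(port) == service_port:
            return int(mapped)
    return None


def node_url(node_ip, ports_cell, service_port):
    """Build the externally reachable URL for a NodePort service."""
    mapped = node_port(ports_cell, service_port)
    if mapped is None:
        raise ValueError(f"no NodePort for service port {service_port}")
    return f"http://{node_ip}:{mapped}"


if __name__ == "__main__":
    NODE_IP = "192.0.2.10"  # placeholder: use the IP of any cluster node
    print("Prometheus:", node_url(NODE_IP, "9090:30303/TCP", 9090))
    print("Grafana:   ", node_url(NODE_IP, "3000:31116/TCP", 3000))
```

The Grafana node port (31116 here) is assigned by Kubernetes because `grafana-svc.yaml` does not set `nodePort`, so it will likely differ on another cluster.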

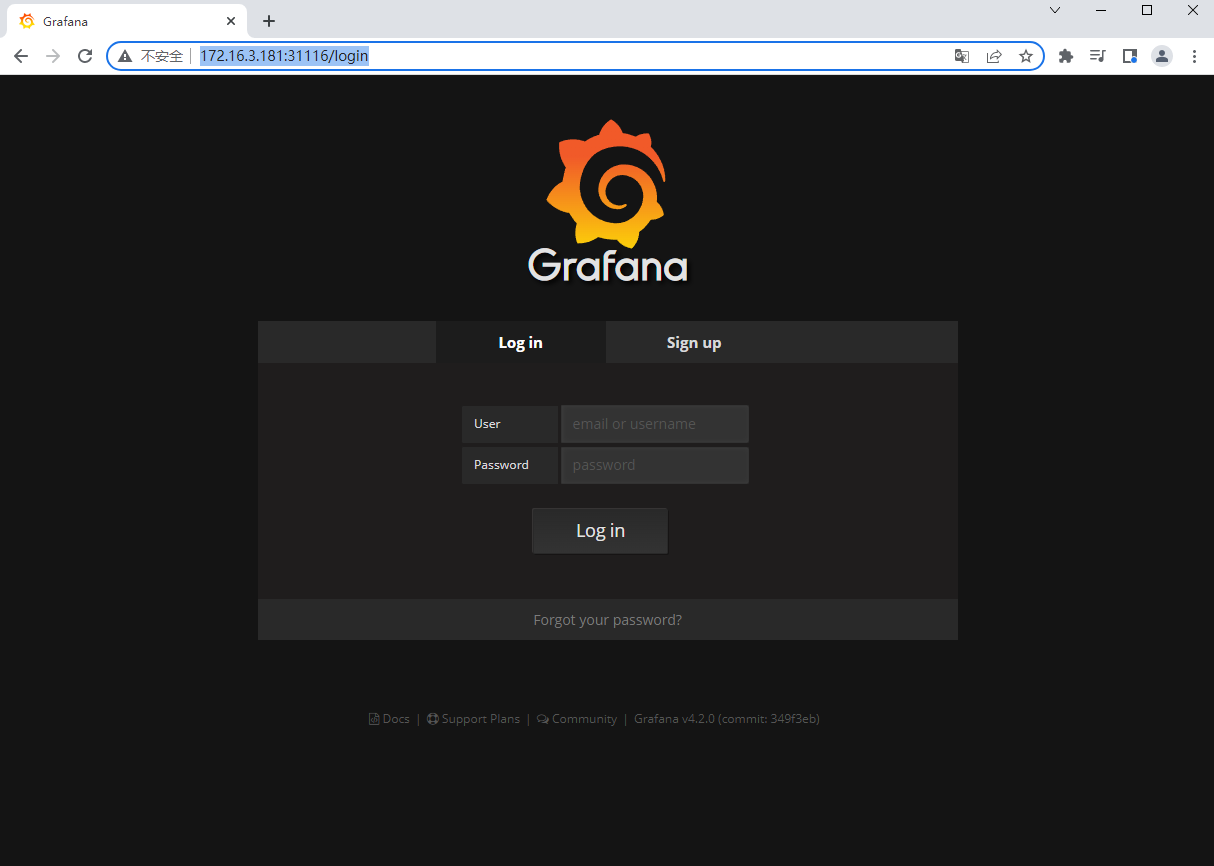

Default username: admin; password: admin

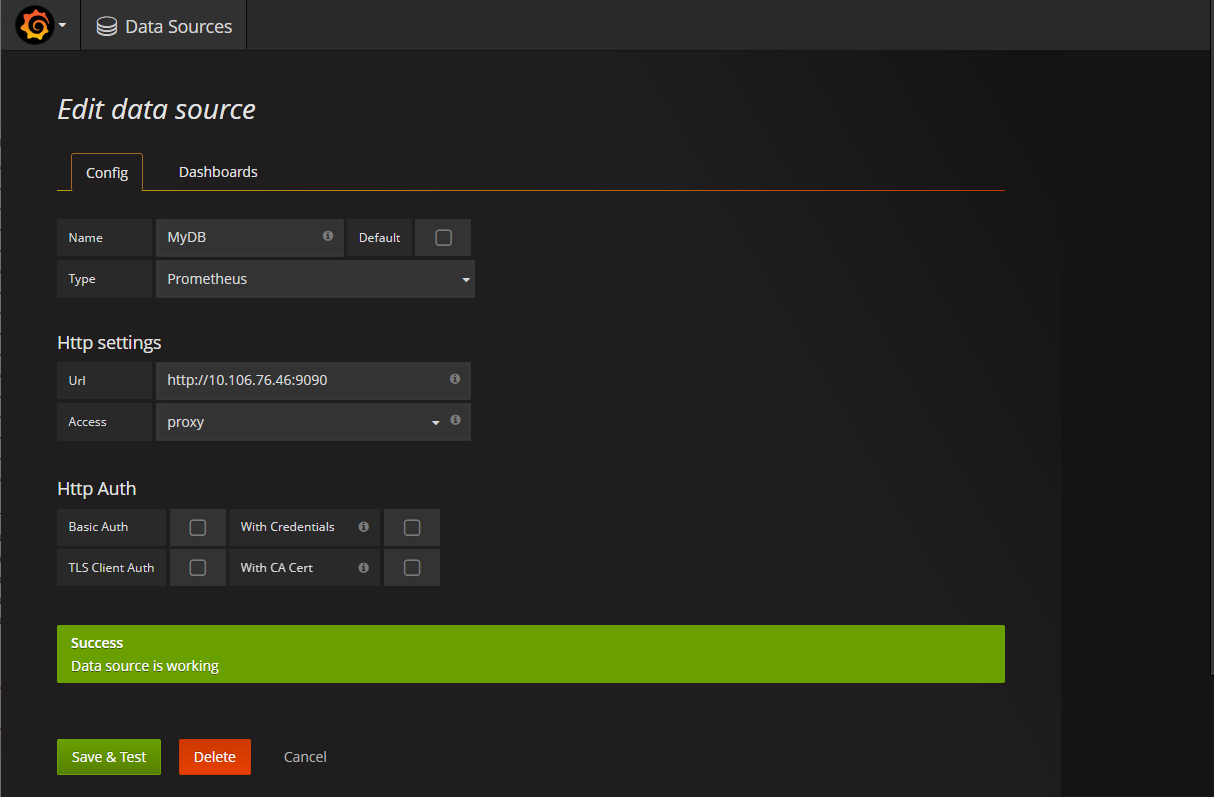

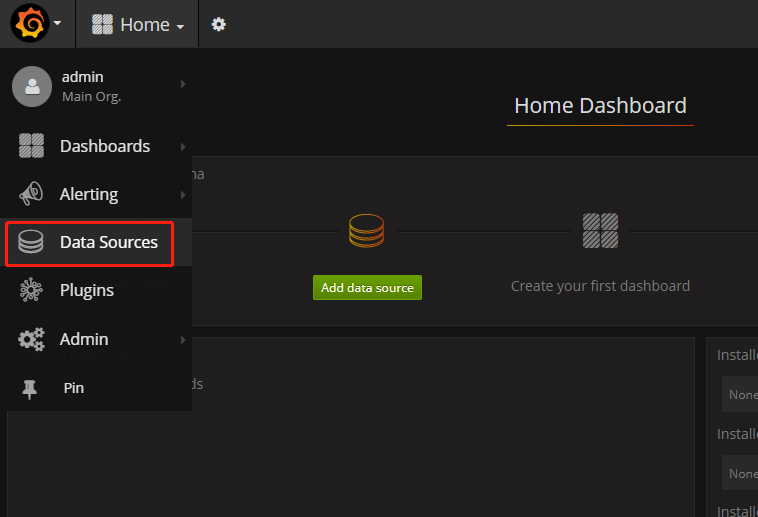

Configure the data source, then click Add.
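The values entered in the data source form can be sketched as the JSON body that Grafana's HTTP API accepts for creating a data source. This is an illustration under assumptions, not the exact form shown in the screenshots: the URL assumes Grafana reaches Prometheus through the in-cluster Service DNS name (`prometheus` in `kube-system`, port 9090, as defined in the manifests above), and the data source name is arbitrary.

```python
import json


def prometheus_datasource(name="prometheus", service="prometheus",
                          namespace="kube-system", port=9090):
    """Build a Grafana data source definition pointing at Prometheus.

    Assumption: Grafana runs inside the cluster, so the Service DNS name
    <service>.<namespace>.svc resolves. From outside the cluster, use
    http://<node-ip>:30303 (the Prometheus NodePort) instead.
    """
    return {
        "name": name,
        "type": "prometheus",
        "url": f"http://{service}.{namespace}.svc:{port}",
        "access": "proxy",  # Grafana's backend issues the queries
        "isDefault": True,
    }


if __name__ == "__main__":
    print(json.dumps(prometheus_datasource(), indent=2))
```

In the UI, the same fields appear as Name, Type, Url, and Access.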