An index of all of Xinchen's (欣宸) original articles, with companion source code: https://github.com/zq2599/blog_demos

kubectl create namespace kafka

kubectl create -f 'https://strimzi.io/install/latest?namespace=kafka' -n kafka

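If your Kubernetes environment provides persistent volumes (PV), deploy a single-node Kafka cluster with persistent storage:

kubectl apply -f https://strimzi.io/examples/latest/kafka/kafka-persistent-single.yaml -n kafka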

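If PVs are not available, deploy the ephemeral single-node cluster instead. Its data lives only as long as each pod:

kubectl apply -f https://strimzi.io/examples/latest/kafka/kafka-ephemeral-single.yaml -n kafka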
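The install steps above can be collected into one function. This is a minimal sketch. It assumes a POSIX-compatible shell with `kubectl` already pointed at the target cluster. The function name `deploy_kafka`, the 60-second CRD wait and the 30-minute readiness timeout are hypothetical choices, not part of the original walkthrough. The two `kubectl wait` calls are additions:

- The first makes sure the Kafka CRD (`kafkas.kafka.strimzi.io`) is registered before the example cluster is applied.
- The second blocks until the `my-cluster` resource reports the `Ready` condition, similar to the wait step in Strimzi's quickstart.

```shell
# Hypothetical helper (sketch): create the namespace, install the Strimzi
# operator, apply one of the two single-node examples, then wait for Ready.
deploy_kafka() {
  local variant="${1:-}"   # "persistent" (needs PV support) or "ephemeral"
  case "$variant" in
    persistent|ephemeral) ;;
    *) echo "usage: deploy_kafka persistent|ephemeral" >&2; return 2 ;;
  esac

  # Each step returns the failing kubectl's exit status and stops the sequence.
  kubectl create namespace kafka || return
  kubectl create -f 'https://strimzi.io/install/latest?namespace=kafka' -n kafka || return
  # Make sure the Kafka CRD is registered before creating a Kafka resource.
  kubectl wait --for=condition=Established crd/kafkas.kafka.strimzi.io --timeout=60s || return
  kubectl apply -f "https://strimzi.io/examples/latest/kafka/kafka-${variant}-single.yaml" -n kafka || return
  # Assumed timeout: image pulls can be slow (a pod in this walkthrough sat
  # in ContainerCreating for over 16 minutes).
  kubectl wait kafka/my-cluster --for=condition=Ready --timeout=30m -n kafka
}
```

For example, run `deploy_kafka persistent` on a cluster with PV support, or `deploy_kafka ephemeral` otherwise. The function stops at the first failing step. Because `kubectl create namespace` fails when the namespace already exists, a retry after a partial install needs the namespace removed first.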

`[root@VM-12-12-centos ~]# kubectl get pod -n kafka`

| NAME | READY | STATUS | RESTARTS | AGE |
| --- | --- | --- | --- | --- |
| strimzi-cluster-operator-566948f58c-h2t6g | 0/1 | ContainerCreating | 0 | 16m |

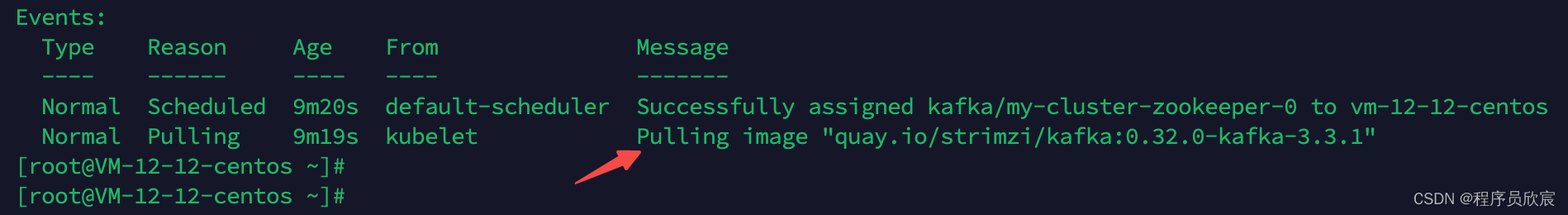

`[root@VM-12-12-centos ~]# kubectl get pods -n kafka`

| NAME | READY | STATUS | RESTARTS | AGE |
| --- | --- | --- | --- | --- |
| my-cluster-zookeeper-0 | 0/1 | ContainerCreating | 0 | 7m59s |
| my-cluster-zookeeper-1 | 0/1 | ContainerCreating | 0 | 7m59s |
| my-cluster-zookeeper-2 | 0/1 | ContainerCreating | 0 | 7m59s |
| strimzi-cluster-operator-566948f58c-h2t6g | 1/1 | Running | 0 | 24m |

`[root@VM-12-12-centos ~]# kubectl get pods -n kafka`

| NAME | READY | STATUS | RESTARTS | AGE |
| --- | --- | --- | --- | --- |
| my-cluster-entity-operator-66598599fc-sskcx | 3/3 | Running | 0 | 73s |
| my-cluster-kafka-0 | 1/1 | Running | 0 | 96s |
| my-cluster-zookeeper-0 | 1/1 | Running | 0 | 14m |
| my-cluster-zookeeper-1 | 1/1 | Running | 0 | 14m |
| my-cluster-zookeeper-2 | 1/1 | Running | 0 | 14m |
| strimzi-cluster-operator-566948f58c-h2t6g | 1/1 | Running | 0 | 30m |
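Above, readiness is judged by eye from repeated `kubectl get pods` listings. The hypothetical function below is a sketch of automating that check. It reads such a listing on stdin and succeeds only if every pod is `Running` with all of its containers ready. It assumes the default column layout shown above (NAME READY STATUS RESTARTS AGE, i.e. without `-A`, which prepends a NAMESPACE column).

```shell
# Hypothetical helper (sketch): succeed only if every pod in a
# `kubectl get pods -n <ns>` listing is Running and fully ready.
all_pods_ready() {
  awk '
    NR > 1 {                            # skip the header row
      total++
      split($2, ready, "/")             # READY "3/3" -> ready[1]=3, ready[2]=3
      if (ready[1] != ready[2] || $3 != "Running") notready++
    }
    END { if (total > 0 && notready == 0) exit 0; exit 1 }
  '
}
```

For example, `until kubectl get pods -n kafka | all_pods_ready; do sleep 10; done` polls until the listing looks healthy. A pod listing cannot tell whether every expected pod has been created yet, though. At the stage where only the operator pod exists and is running, the check already passes. Waiting on the Kafka resource itself avoids that gap, e.g. `kubectl wait kafka/my-cluster --for=condition=Ready --timeout=300s -n kafka`.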

In one terminal, start a console producer in a temporary pod:

kubectl -n kafka run kafka-producer -ti --image=quay.io/strimzi/kafka:0.32.0-kafka-3.3.1 --rm=true --restart=Never -- bin/kafka-console-producer.sh --bootstrap-server my-cluster-kafka-bootstrap:9092 --topic my-topic

In a second terminal, start a console consumer that reads my-topic from the beginning:

kubectl -n kafka run kafka-consumer -ti --image=quay.io/strimzi/kafka:0.32.0-kafka-3.3.1 --rm=true --restart=Never -- bin/kafka-console-consumer.sh --bootstrap-server my-cluster-kafka-bootstrap:9092 --topic my-topic --from-beginning

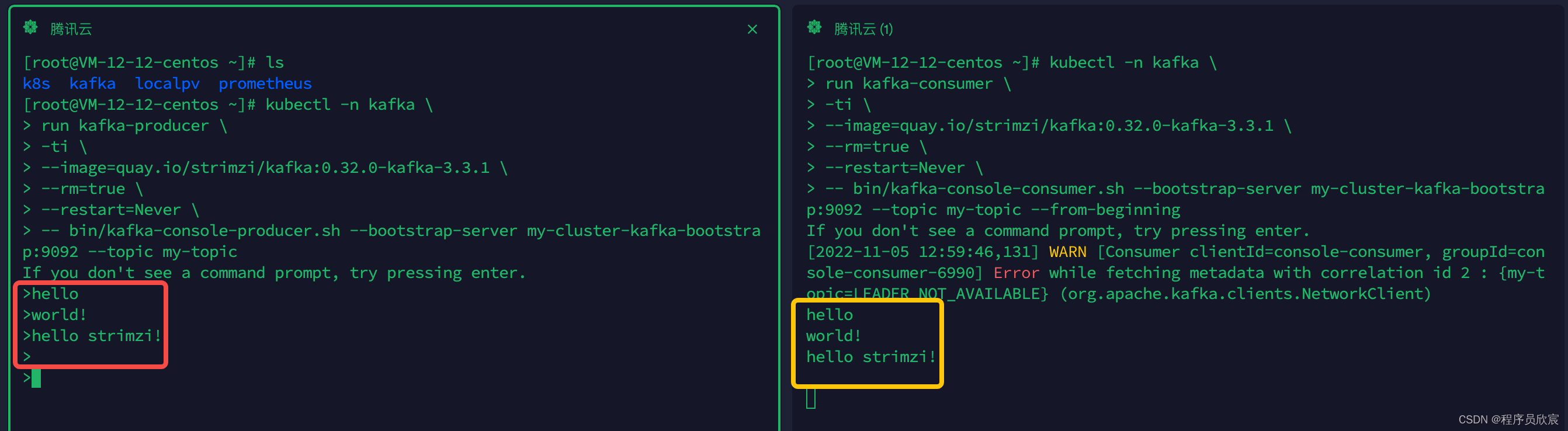

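Now type some text in the producer window and press Enter to send it as a message. In the screenshot below, the red box on the left shows four messages sent, the last one an empty string. The yellow box on the right shows all four received by the consumer.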

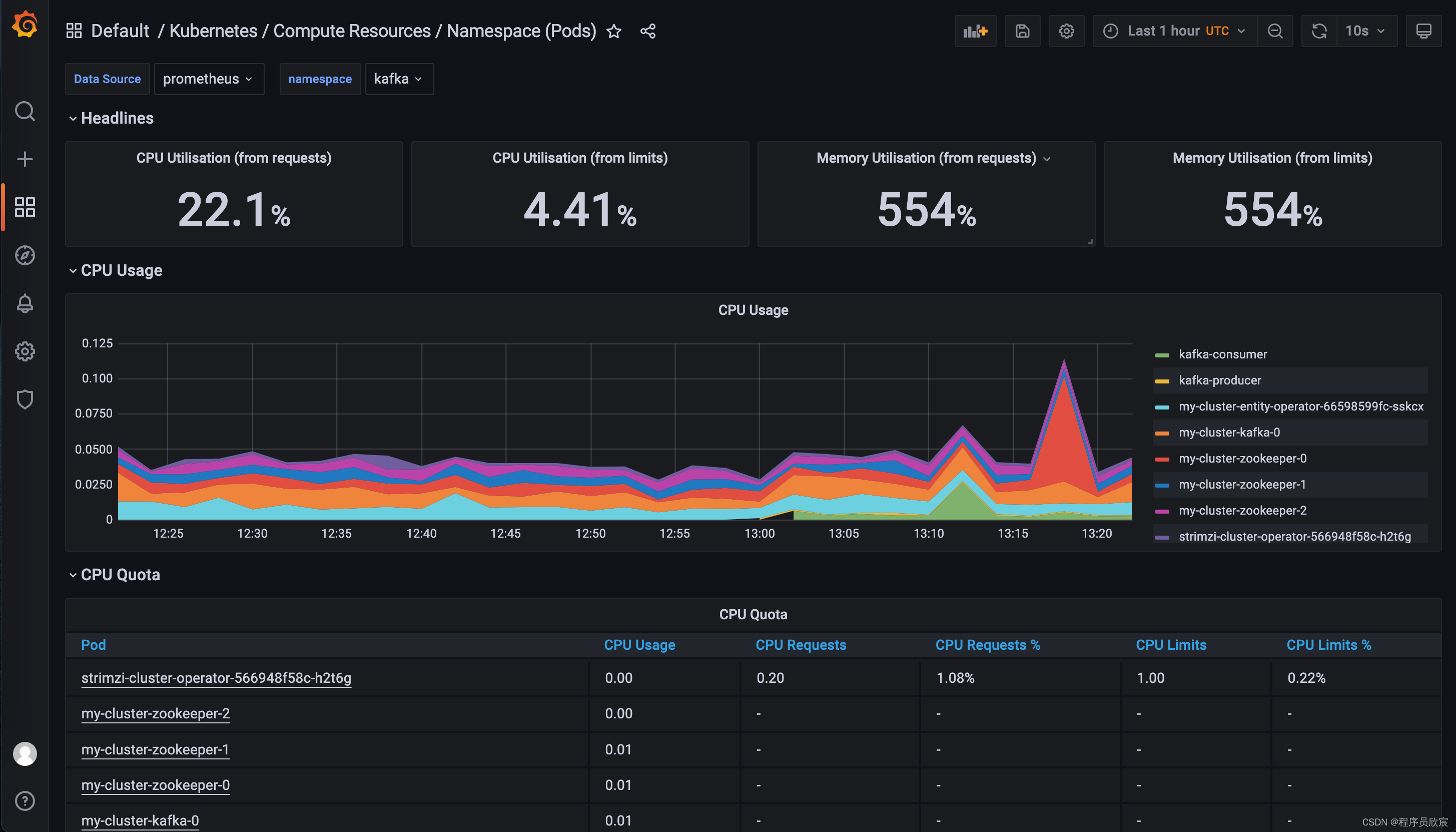
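The producer and consumer commands differ only in the client role and the consumer's extra flag. The wrapper below is a sketch that keeps the image tag and bootstrap address in one place. `kafka_client`, `KAFKA_IMAGE` and `KAFKA_BOOTSTRAP` are hypothetical names, and the values are copied from the commands above.

```shell
# Hypothetical helper (sketch): run a Kafka console producer or consumer in a
# throwaway pod. Values are copied from the kubectl run commands above.
KAFKA_IMAGE="quay.io/strimzi/kafka:0.32.0-kafka-3.3.1"
KAFKA_BOOTSTRAP="my-cluster-kafka-bootstrap:9092"

kafka_client() {
  local role="${1:-}" topic="${2:-}"
  case "$role" in producer|consumer) ;; *) role="" ;; esac
  if [ -z "$role" ] || [ -z "$topic" ]; then
    echo "usage: kafka_client producer|consumer TOPIC [extra args...]" >&2
    return 2
  fi
  shift 2   # anything left (e.g. --from-beginning) goes to the console script
  kubectl -n kafka run "kafka-$role" -ti \
    --image="$KAFKA_IMAGE" --rm=true --restart=Never \
    -- "bin/kafka-console-$role.sh" \
    --bootstrap-server "$KAFKA_BOOTSTRAP" --topic "$topic" "$@"
}
```

Run `kafka_client producer my-topic` in one terminal and `kafka_client consumer my-topic --from-beginning` in another. The pod name is fixed per role (`kafka-producer`, `kafka-consumer`). As a result, `kubectl run` rejects a second producer started while the first is still running, because a pod with that name already exists.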

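If your Kubernetes environment was set up following the article 《快速搭建云原生开发环境(k8s+pv+prometheus+grafana)》 ("Quickly Setting Up a Cloud-Native Development Environment: k8s + pv + prometheus + grafana"), you can already see the resources in the kafka namespace in Grafana, as shown below: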

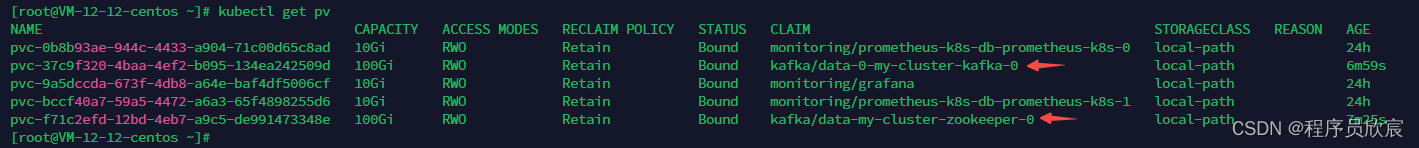

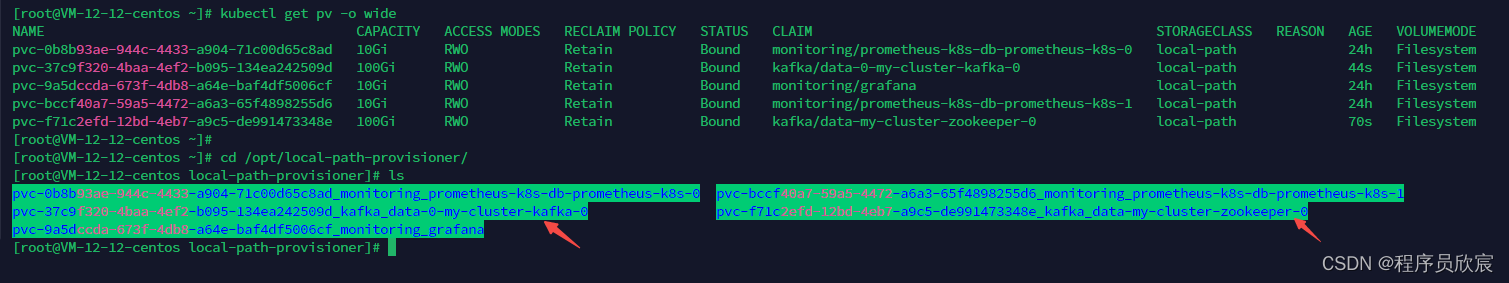

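In addition, if you are using PVs, you can also keep an eye on PV usage, as shown below. Kafka and ZooKeeper data now live on external storage, so they are not lost when a pod fails.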

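However, Strimzi monitoring has not been configured yet, so Kafka-level metrics are not available. For now, only basic pod metrics can be viewed, from the Kubernetes perspective. Kafka monitoring will be covered in a later chapter.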

If you deployed the persistent cluster, uninstall everything with:

kubectl delete -f https://strimzi.io/examples/latest/kafka/kafka-persistent-single.yaml -n kafka && kubectl delete -f 'https://strimzi.io/install/latest?namespace=kafka' -n kafka && kubectl delete namespace kafka

If you deployed the ephemeral cluster, uninstall everything with:

kubectl delete -f https://strimzi.io/examples/latest/kafka/kafka-ephemeral-single.yaml -n kafka && kubectl delete -f 'https://strimzi.io/install/latest?namespace=kafka' -n kafka && kubectl delete namespace kafka
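The two cleanup commands differ only in the example file, so one parameterized function can cover both. This is a minimal sketch, and `teardown_kafka` is a hypothetical name. It adds `--ignore-not-found`, a standard `kubectl delete` flag not used in the original commands, on the assumption that a re-run after a partial cleanup should succeed rather than fail. The operator manifest is still deleted explicitly instead of relying on namespace deletion. That manifest contains cluster-scoped objects such as CRDs and ClusterRoles, which deleting the namespace would not remove.

```shell
# Hypothetical helper (sketch): remove the example cluster, then the operator,
# then the namespace, in the same order as the commands above.
teardown_kafka() {
  local variant="${1:-}"   # must match the variant that was deployed
  case "$variant" in
    persistent|ephemeral) ;;
    *) echo "usage: teardown_kafka persistent|ephemeral" >&2; return 2 ;;
  esac

  # Assumption: --ignore-not-found lets a re-run after a partial cleanup succeed.
  kubectl delete --ignore-not-found -f "https://strimzi.io/examples/latest/kafka/kafka-${variant}-single.yaml" -n kafka || return
  # The operator install includes cluster-scoped objects (CRDs, ClusterRoles),
  # which deleting the namespace alone would leave behind.
  kubectl delete --ignore-not-found -f 'https://strimzi.io/install/latest?namespace=kafka' -n kafka || return
  kubectl delete --ignore-not-found namespace kafka
}
```

Pass the same variant that was deployed, e.g. `teardown_kafka ephemeral`.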

`[root@VM-12-12-centos ~]# kubectl get pod -A`

| NAMESPACE | NAME | READY | STATUS | RESTARTS | AGE |
| --- | --- | --- | --- | --- | --- |
| calico-apiserver | calico-apiserver-67b7856948-bg2wh | 1/1 | Running | 0 | 6d2h |
| calico-apiserver | calico-apiserver-67b7856948-fz64n | 1/1 | Running | 0 | 6d2h |
| calico-system | calico-kube-controllers-78687bb75f-z2r7m | 1/1 | Running | 0 | 6d2h |
| calico-system | calico-node-l6nmw | 1/1 | Running | 0 | 6d2h |
| calico-system | calico-typha-b46ff96f6-qqzxb | 1/1 | Running | 0 | 6d2h |
| calico-system | csi-node-driver-lv2g2 | 2/2 | Running | 0 | 6d2h |
| kafka | my-cluster-entity-operator-66598599fc-fz7wx | 3/3 | Running | 0 | 4m57s |
| kafka | my-cluster-kafka-0 | 1/1 | Running | 0 | 5m22s |
| kafka | my-cluster-zookeeper-0 | 1/1 | Running | 0 | 5m48s |
| kafka | strimzi-cluster-operator-566948f58c-pj45s | 1/1 | Running | 0 | 6m15s |
| kube-system | coredns-78fcd69978-57r7x | 1/1 | Running | 0 | 6d2h |
| kube-system | coredns-78fcd69978-psjcs | 1/1 | Running | 0 | 6d2h |
| kube-system | etcd-vm-12-12-centos | 1/1 | Running | 0 | 6d2h |
| kube-system | kube-apiserver-vm-12-12-centos | 1/1 | Running | 0 | 6d2h |
| kube-system | kube-controller-manager-vm-12-12-centos | 1/1 | Running | 0 | 6d2h |
| kube-system | kube-proxy-x8nhg | 1/1 | Running | 0 | 6d2h |
| kube-system | kube-scheduler-vm-12-12-centos | 1/1 | Running | 0 | 6d2h |
| local-path-storage | local-path-provisioner-55d894cf7f-mpd2n | 1/1 | Running | 0 | 3d21h |
| monitoring | alertmanager-main-0 | 2/2 | Running | 0 | 24h |
| monitoring | alertmanager-main-1 | 2/2 | Running | 0 | 24h |
| monitoring | alertmanager-main-2 | 2/2 | Running | 0 | 24h |
| monitoring | blackbox-exporter-6798fb5bb4-4hmf7 | 3/3 | Running | 0 | 24h |
| monitoring | grafana-d9c6954b-qts2s | 1/1 | Running | 0 | 24h |
| monitoring | kube-state-metrics-5fcb7d6fcb-szmh9 | 3/3 | Running | 0 | 24h |
| monitoring | node-exporter-4fhb6 | 2/2 | Running | 0 | 24h |
| monitoring | prometheus-adapter-7dc46dd46d-245d7 | 1/1 | Running | 0 | 24h |
| monitoring | prometheus-adapter-7dc46dd46d-sxcn2 | 1/1 | Running | 0 | 24h |
| monitoring | prometheus-k8s-0 | 2/2 | Running | 0 | 24h |
| monitoring | prometheus-k8s-1 | 2/2 | Running | 0 | 24h |
| monitoring | prometheus-operator-7ddc6877d5-d76wk | 2/2 | Running | 0 | 24h |
| tigera-operator | tigera-operator-6f669b6c4f-t8t9h | 1/1 | Running | 0 | 6d2h |